Fighting “bad science” in the information age: The effects of an intervention to stimulate evaluation and critique of false scientific claims

Abstract

With developments in technology (e.g., “Web 2.0” sites that allow users to author and create media content) and the removal of publication barriers, the quality of science information online now varies vastly. These changes in how published science information is reviewed, along with the increased ease of distributing information, have resulted in the spread of misinformation about science. As a result, teaching students to evaluate scientific claims as they read has become a pressing educational issue. While recent studies have examined educational strategies for supporting the evaluation of sources and the plausibility of claims, there is little extant work on supporting students in critiquing claims for flawed scientific reasoning. This study tested whether a structured reading-support intervention for evaluation and critique cultivates a critical awareness of flawed scientific claims in an online setting. We developed and validated a questionnaire to measure epistemic vigilance, implemented a large-scale (N = 1081) Randomized Controlled Trial (RCT) of an original reading activity that elicits evaluation and critique of scientific claims, and measured whether the intervention increased epistemic vigilance toward misinformation. Our RCT results suggested a moderate effect among students who complied with the treatment intervention. Furthermore, analyses of heterogeneous effects suggested that the intervention effects were driven by 11th-grade students and by students who self-reported moderate trust in science and medicine. Our findings point to the need for additional opportunities and instruction for students on critiquing scientific claims and on the nature of specific errors in scientific reasoning.

1 RESEARCH PROBLEM

With the advent of recent Web technologies, the landscape of information available to the public has changed dramatically. While more information is available than ever, more false or misleading information is also being published, distributed, and consumed by the public (Barzilai & Chinn, 2020; Bromme & Goldman, 2014; Graesser, Millis, D'Mello, & Hu, 2014; Kiili, Laurinen, & Marttunen, 2008; Lewandowsky, 2020; Rojecki & Meraz, 2016). New Web technologies have exacerbated these problems, allowing scientific misinformation to spread widely and rapidly without the gatekeeping of traditional media publication standards (Moran, 2020; Zarocostas, 2020).

Recent concerns about the societal impacts of misinformation have raised the question of how to develop youth as critical thinkers who can evaluate flawed information (Kavanagh & Rich, 2018). In particular, public understanding of science has faced challenges from Web-based misinformation, as demonstrated by the role of false media claims in complex scientific issues such as the modern vaccine controversy (see Briones, Nan, Madden, & Waks, 2012; Wilson, Paterson, & Larson, 2014). Society also grapples with the influence of a “post-truth era” (McIntyre, 2018), in which the public gives more weight in decision-making to personal beliefs and emotions than to tested and validated knowledge (Sinatra & Lombardi, 2020). These effects are particularly concerning for young learners of science, whose epistemic and content knowledge is still developing. While the problem of reasoning with erroneous information has been studied by researchers in cognitive psychology (Halpern, 2002; Kahneman, 2011; Sperber et al., 2010), research on pedagogical methods to address it is still emerging. It is therefore necessary to develop strategies that improve students' ability to assess claims about scientific issues and to test the efficacy of those strategies.

The present study tested an intervention targeting the evaluation and critique of flawed scientific claims in a Web-based media context. Specifically, we investigated the question, “Does prompting for critique heighten students' ability to be vigilant of flaws in scientific information?” We implemented an intervention designed to induce epistemic vigilance, or an assessment of the believability of communicated information (Sperber et al., 2010), by prompting students to critique scientific claims. The intervention was conducted in 43 high school science classes at seven schools in California in 2017. We measured students' epistemic vigilance in a pre-test, used block randomization within classrooms to assign students to conditions, had students complete the activity for their assigned condition, and then assessed their post-intervention epistemic vigilance. The findings of this study offer guidance about whether a critical reading intervention can strengthen students' epistemic vigilance and provide insight into design considerations for such interventions in classroom settings.
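Block randomization within classrooms treats each class as its own stratum, so treatment and control students share a teacher, schedule, and class context. The sketch below illustrates the idea under stated assumptions: students are split as evenly as possible within each class, with any odd student assigned to a randomly chosen arm. The function name `block_randomize`, the input format, and the fixed seed are hypothetical and are not details reported by the study.

```python
import random
from collections import defaultdict

def block_randomize(students, seed=2017):
    """Assign students to 'treatment' or 'control' within each classroom block.

    students: list of (student_id, classroom_id) tuples (hypothetical format).
    Within each classroom the arms differ in size by at most one student.
    """
    rng = random.Random(seed)  # fixed seed makes the assignment reproducible
    by_class = defaultdict(list)
    for student_id, classroom_id in students:
        by_class[classroom_id].append(student_id)

    assignment = {}
    for classroom_id in sorted(by_class):  # stable order so the seed fully determines results
        ids = by_class[classroom_id][:]
        rng.shuffle(ids)
        n_treat = len(ids) // 2
        # For an odd-sized class, randomly decide which arm gets the extra student.
        if len(ids) % 2 == 1 and rng.random() < 0.5:
            n_treat += 1
        for position, sid in enumerate(ids):
            assignment[sid] = "treatment" if position < n_treat else "control"
    return assignment

# Usage: 20 hypothetical students spread over three classes.
students = [(f"s{i}", f"class{i % 3}") for i in range(20)]
a = block_randomize(students)
```

Randomizing within classes rather than across the whole pool guards against chance imbalances between arms in teacher or class composition.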

2 BACKGROUND

2.1 The science information landscape: A rapid evolution and decay

Historically, the interpretation of scientific findings remained largely in the hands of science experts (Halliday & Martin, 1994) or was communicated to the public through textbooks and traditional print media (McClune & Jarman, 2010; Myers, 1992). Today, with the affordances of Internet technology, people can access large quantities of information about science of varying quality and validity (Fernández-Luque & Bau, 2015; National Academies of Science & Medicine, 2016). Additionally, non-experts can easily publish and redistribute any scientific claim via social media (Allcott & Gentzkow, 2017; Fernández-Luque & Bau, 2015; Keller, Labrique, Jain, Pekosz, & Levine, 2014; Lee & Ma, 2012; Sinatra & Lombardi, 2020). As a result, scientifically flawed arguments have circulated widely, challenging long-established scientific conclusions (Barzilai & Chinn, 2020; Betsch & Sachse, 2012; Briones et al., 2012; Wilson et al., 2014).

2.2 Defining science misinformation

Misinformation is partially or entirely incorrect information that is distributed without the intent to deceive. Disinformation is inaccurate information shared with the intent to deceive or harm (Wardle & Derakhshan, 2017). In this study, we define scientific misinformation as information that contains scientific errors or erroneous ideas about science. We do not address intent, because in the present study it is difficult to establish intent when misinformation is communicated. We draw on Smithson's (1985) social theories of ignorance, in which erroneous ideas can be characterized by incompleteness (i.e., omission or ambiguity) or distortion (i.e., mistaken information). For example, incompleteness in scientific claims may include claims taken out of a study's context, whereas distortions of science may involve biased assessments of data (Allchin, 2001). This definition of misinformation encompasses content that contains inaccuracies about scientific concepts, as well as interpretations that rely on flawed scientific reasoning, regardless of the author's intent.

2.3 Defending youth against misinformation: The role of education

An emerging area of research focuses on educating youth at the K-12 level to confront complexities in the contemporary information landscape, particularly because educating adults would require voluntary participation and overcoming entrenched values and beliefs (Kavanagh & Rich, 2018; Petty & Wegener, 1999). Some researchers in political science and communication have suggested that without training to reason with new media, the current generation of students will become adults who are vulnerable to misleading information or digital deception (Kavanagh & Rich, 2018). Such fears are not unfounded: recent research demonstrates that students, despite being raised in a digital media environment, are still prone to accepting false information from Internet resources (Cheng, Bråten, Yang, & Brandmo, 2021; Tseng, 2018) and trust misinformation in Web-based media as much as they trust accurate news (Wineburg and Stanford History Education Group, 2016). Furthermore, research in science education has repeatedly suggested that school-age students accept claims in science articles without questioning the supporting evidence or reasoning (see Norris & Phillips, 1994; Ratcliffe, 1999). These studies suggest that students may view scientific claims in media as objective and fail to examine the evidence supporting them. Overall, because students struggle both to evaluate scientific claims and to assess the veracity of Web-based information, education on reasoning with scientific claims in online settings is warranted.

3 THEORETICAL FRAMING AND RELEVANT LITERATURE

3.1 “Thinking slow” to maintain epistemic vigilance against misinformation

This study is based on the theoretical notion that people can avoid falling prey to deception by consciously enabling a critical awareness, or epistemic vigilance, of communicated messages (Kahneman, 2011; Sperber et al., 2010). Sperber et al. (2010) describe epistemic vigilance as “not the opposite of trust; the opposite of blind trust” (p. 235), and they argue that trustful communication between individuals is possible only if humans possess cognitive mechanisms to identify what is untrue. By exercising such vigilance, individuals limit the potential harm of relying on testimony without question (Gierth & Bromme, 2020). Epistemic vigilance can be achieved by “thinking slow,” or thinking critically to assess a communicator's perceived competence and benevolence and to evaluate the validity of claims and justifications (Kahneman, 2011; Sperber et al., 2010). Research in cognitive psychology and philosophy has consistently suggested that humans are trustful by nature and inclined to accept the information they are presented with, especially when that information is familiar and confirms their pre-existing attitudes (Gilbert, Krull, & Malone, 1990; Mercier & Sperber, 2011; Nickerson, 1998; Petty & Wegener, 1999; Swire, Ecker, & Lewandowsky, 2017).

Additionally, in today's information landscape, competing claims are abundant and come from sources of wide-ranging quality, heightening the risk of being misinformed. Under these conditions, assessing information critically is not an intuitive process but one that requires a great deal of deliberation and effort (Halpern, 2002; Kahneman, 2011; Sperber et al., 2010). Research in cognitive science has suggested that thinking takes two broad forms: System 1 “Fast” Thinking, which is effortless and largely based on intuition, and System 2 “Slow” Thinking, which is effortful and deliberate (Halpern, 2002; Kahneman, 2011).1 System 1 “Fast” Thinking allows for swift decisions when time and information are limited (Gigerenzer, 2007), but it is constrained by the limits of human cognition, such as bounded rationality (Simon, 1978), and distorted by social and emotional biases (Halpern, 2002). In contrast, System 2 “Slow” Thinking involves the effortful work of evaluation (judging a source's credibility, weighing evidence, assessing risk, and evaluating arguments), the actions that make up critical thinking.

Because students' content-area knowledge is still developing, they are especially prone to the vulnerabilities of intuition and “Fast” Thinking, such as the use of mental shortcuts to evaluate information (Halpern, 2002; Petty & Wegener, 1999). There is therefore a pressing need to support students in “thinking slow” about science and avoiding “fast and frugal heuristics” (Tabak, 2020), with the goal of maintaining epistemic vigilance against flawed scientific arguments.

3.2 Maintaining epistemic vigilance in the context of evaluating scientific claims

So, how does one “think slow” and maintain epistemic vigilance specifically when evaluating scientific claims? Critically evaluating scientific claims requires competencies of argument, analysis, and critique (NRC, 2012). Scholars in science education suggest that laypeople evaluate both what is said (i.e., a first-hand evaluation of the claims) and “who said it” (i.e., a second-hand evaluation of the source's trustworthiness) (Bromme & Goldman, 2014; Sharon & Baram-Tsabari, 2020). For example, a first-hand evaluation may involve assessing scientific arguments for the validity of their reasoning and the quality of the evidence used to support claims, while a second-hand evaluation might involve considering the source's special interests and biases. Likewise, an increasingly large body of research suggests that to establish the validity of any scientific idea, students must evaluate ideas by considering alternative interpretations of the text, asking questions about the source's intentions, and challenging claims and ideas (see Pearson, Moje, & Greenleaf, 2010). In response to misinformation about science, recent research in education has examined various strategies to scaffold evaluation, but “current methods of teaching… evaluation of online scientific information are up against an arms race of escalating information” (Sinatra & Lombardi, 2020, p. 127). Next, we examine extant research on scaffolding evaluation.

3.3 Evaluating “Who Said It”: Incomplete solutions for maintaining epistemic vigilance

There are two main strategies for evaluating a source's trustworthiness. The first strategy for maintaining vigilance is relying on experts for reliable information. The identification of misinformation is often framed as a matter of trust, solved by consulting subject-matter experts who have depth of knowledge in a particular domain (Baram-Tsabari & Osborne, 2015). For laypeople, this may mean recognizing when science is relevant to their needs and interacting with sources of expertise to meet those needs (Feinstein, 2011). However, this perspective is limited by a lack of consensus among experts themselves on controversial topics in science and by laypeople's lack of the prior knowledge needed to interact meaningfully with sources of expertise (Sharon & Baram-Tsabari, 2020). Notably, some experts' interests are misaligned with those of the members of the public who depend on them, as with experts who have contributed to misinformation campaigns or false claims (e.g., the purported link between vaccines and autism) (Sharon & Baram-Tsabari, 2020).

The second strategy is sourcing, which involves interacting with reliable scientific expertise to evaluate the sources of claims. A robust body of literature focuses specifically on how to support students in the practice of sourcing, which requires attending to, evaluating, and using available or alternative information about the origin of documents.2 Researched strategies often involve evaluating the source of claims in media (Stadtler, Scharrer, Macedo-Rouet, Rouet, & Bromme, 2016; Wineburg and Stanford History Education Group, 2016).

The evaluation of sources is important but painstaking work, and research suggests that students do not spontaneously engage in it while reading online (Scharrer & Salmerón, 2016). Still, several recent studies have shown that students can become better at sourcing (e.g., Bråten, Brante, & Strømsø, 2019; McGrew et al., 2019), though sourcing has several notable limitations (Sinatra & Lombardi, 2020). First, mastery in sourcing requires specialized background knowledge of the unique attributes of Internet media, such as virality (e.g., Törnberg, 2018), and of the special interests and biases of media reporting. Second, readers have difficulty finding the source of information that is detached from its content, a common complexity of Web media (Stadtler & Bromme, 2008). Third, rapid changes in the online information landscape consistently outpace what we have learned from research (Breakstone, McGrew, Smith, Ortega, & Wineburg, 2018). Fourth, the identities of communicators on the Web are easy to manipulate and use for deception (see Hancock, 2007). Finally, researchers have also identified contemporary concerns driven by Internet media, such as false-consensus effects, echo chambers, and confirmation bias (see Höttecke & Allchin, 2020). Clearly, supporting students in the practice of sourcing is an important aspect of critically evaluating scientific information, yet this work faces significant challenges and does not offer a complete solution for maintaining vigilance.

3.4 Strategies for evaluating “What is Said”

In addition to evaluating sources for reliability, epistemic vigilance calls upon the reader to evaluate the claims themselves. Indeed, recent research suggests that when source information is insufficient to establish the epistemic validity of a claim, people rely more heavily on evaluating the claim's validity directly, because they have no means of identifying whether the source is untrustworthy (Gierth & Bromme, 2020).

Therefore, while source evaluation certainly plays a crucial role in epistemic vigilance against misinformation, it is only a partial solution that must be complemented by instruction that supports students in effectively evaluating claims and justifications.

One strategy is to explicitly reappraise plausibility judgments. Central to this strategy is determining whether an explanation is plausible and assessing the available evidence in support of it. Relevant research on plausibility judgments has included the Plausibility Judgments in Conceptual Change model (Lombardi, Nussbaum, & Sinatra, 2016), in which individuals pre-process the validity of the information source based on characteristics including alignment with existing background knowledge, perceived uncertainty, and source credibility. Moreover, reappraising plausibility judgments requires explicit cognitive processing, namely “cognitive decoupling,” or critically gauging the merits of alternative explanations by their alignment with different types of evidence (Evans & Stanovich, 2013, p. 236). One limitation is that implicitly formed plausibility judgments may fall back on persuasive nonscientific explanations, especially when individuals do not have well-formed ideas about a phenomenon (Sinatra & Lombardi, 2020). Additionally, emotions play a complex role: individuals must be motivated to decouple, yet also willing to inhibit non-productive emotions (e.g., anger, hopelessness) that may interfere with cognitive evaluation (Lombardi & Sinatra, 2013).

To address these challenges, researchers have developed instructional scaffolds, such as Model-Evidence-Link (MEL) diagrams, that help students engage in purposeful processing of claims and evidence and consider novel and alternative explanations (Chinn & Buckland, 2012; Lombardi, Bailey, Bickel, & Burrell, 2018; Lombardi, Sinatra, & Nussbaum, 2013). MEL activities require students to judge whether the evidence supports, contradicts, or is irrelevant to a model. Research reveals the effectiveness of MEL diagrams as scaffolds for evaluating plausibility (Lombardi et al., 2018) but also raises the question of how students can make this evaluation effectively, particularly when they have little content knowledge to draw from and may be swayed by emotions and unscientific motivations.

3.5 Improving the evaluation of “what is said”: supporting critique of scientific claims to bolster epistemic vigilance

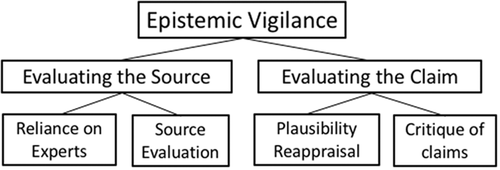

So, how can epistemic vigilance be bolstered when content knowledge is limited and information about sources is scarce? Sperber et al. (2010) suggest that when epistemic vigilance is activated by the risk of deception, individuals look to falsify potentially deceptive claims. Additionally, some experiments have shown that when epistemic vigilance targets potentially untrustworthy sources, individuals make stronger cognitive efforts to be critical of claims (Copeland, Gunawan, & Bies-Hernandez, 2011; Gierth & Bromme, 2020). Taken together, these findings suggest that epistemic vigilance is bolstered when the source's trustworthiness is in question. It follows that prompting for critique or questioning of claims and their sources could amplify epistemic vigilance. Figure 1 depicts the aspects of epistemic vigilance summarized so far and identifies, in the bottom right corner, the focus of the present study: critique of scientific claims.

To construct reliable scientific knowledge, it is necessary not only to evaluate and reappraise plausibility but also to critique (Ford, 2008; Henderson, MacPherson, Osborne, & Wild, 2015; National Research Council, 2012). Evaluation and critique are both central to the scientific process that links investigation with explanations. Despite the importance of both processes, critique has been a neglected component in the construction of scientific knowledge (Ford, 2008; Henderson et al., 2015; Osborne et al., 2016). For example, the Next Generation Science Standards address only narrowly the importance of evaluating or critiquing scientific information, particularly in the context of controversial science issues and of Web media, which facilitate the wide publication and distribution of non-expert claims (NCES, 2012).

Critique and questioning of scientific claims require an awareness of the relevant questions that can be asked and the potential flaws that can be questioned from a scientific standpoint (see Allchin, 2001). Flaws in scientific reasoning include deviations from appropriate scientific practices (e.g., sampling errors, lack of comparison groups, overgeneralization of conclusions, biased reporting of research), a disregard for alternative interpretations of data, illogical conclusions, and the omission of details in reporting of scientific research (Allchin, 2001; Ryder, 2001). Some studies have suggested ways for students to learn about scientific error, particularly in conjunction with teaching the nature of science and the tentative nature of scientific research that is in progress. For example, some have advocated for the use of historical case studies involving errors in scientific research as the basis for classroom instruction on the nature of science (Allchin, 2001). Notably, the medical field has examined numerous strategies for detecting errors through critique, focusing on improving the evaluation of clinical evidence (Berner & Graber, 2008; Bonini, Plebani, Ceriotti, & Rubboli, 2002; Nyssen & Blavier, 2006; Strobel, Heitzmann, Strijbos, Kollar, & Fischer, 2016). However, empirical studies focusing on the critique of scientific claims, particularly misleading or inaccurate claims, are rare in K-12 educational research.

An increasingly large body of literature in science education underscores the need for students to practice evaluating ideas, yet students rarely engage actively in challenging claims and ideas (see Tseng, 2018; Lemke, 1990; Lombardi et al., 2013; Pearson, Moje, & Greenleaf, 2010). In one recent study (Tseng, 2018), high school students thought aloud (Ericsson & Simon, 1980) while reading a Web media text containing erroneous claims about vaccination safety. While some students were critical of the article from the outset, others who had immediately accepted the author's flawed claims as credible changed their stance when directed to critique the article. These findings suggest that, given the opportunity to critique, students may evaluate claims more thoroughly and thus become more critical of misinformation.

Thus, in this study, we sought to address this gap in evaluation strategies for tackling misinformation by supporting students in the practice of critique. We propose that to reason effectively with science misinformation on the Internet, students need not only to evaluate sources for reliability but also to evaluate claims for the quality of their reasoning and, specifically, to question and challenge potential flaws in those claims. We therefore posit that cultivating epistemic vigilance toward science misinformation requires structured opportunities for students to question and critique potential threats to scientific validity. This study makes two contributions: the development of an intervention designed to increase students' epistemic vigilance by directing them to “think slow” and question potential errors in claims, and a rigorous field evaluation of that intervention with over 1,000 students in a randomized controlled trial.

4 STUDY OVERVIEW

4.1 Using a reading activity: rationale

We developed a reading activity to bolster epistemic vigilance because of the crucial role of critical reading in interpreting scientific texts (Norris & Phillips, 2003; Shanahan & Shanahan, 2008). Critical reading (see Cottrell, 2011) involves evaluating information by asking relevant questions about the author's intentions, identifying biases, evaluating the quality and volume of sources used as evidence, and assessing the strength of the author's reasoning. Existing research has identified critical reading as a useful strategy for students approaching Web-based media (Kavanagh & Rich, 2018), particularly given the lack of editorial review in user-generated Web media and the prevalence of text-based interactions in Web media (Hancock, 2007). A large body of literature demonstrates that literacy requires support, underscoring the value of comprehension and metacognitive strategies used concurrently with reading (see Barry, 2002; Pressley, 1998). Some of these strategies specifically engage students in active interrogation of text to improve comprehension, including anticipation guides that provoke disagreement (Kozen, Murray, & Windell, 2006). Previous research has also examined the utility and effectiveness of teaching students to generate questions (see Chin & Osborne, 2008) and, specifically, to question the author's intentions (Beck, McKeown, Sandora, Kucan, & Worthy, 1996). Overall, questioning strategies have been found to foster comprehension and metacognition (Palincsar & Brown, 1984; Wong, 1985), resulting in more active processing of text (Craik & Lockhart, 1972; Singer, 1978). Furthermore, and more relevant to reading misinformation, these strategies help readers guard against premature or erroneous conclusions (Rosenshine, Meister, & Chapman, 1996). Thus, support for critical reading should, in theory, contribute to a more vigilant stance toward inaccurate claims, including those in online settings.

4.2 Experimental conditions

In the present study, we conducted a field-based experiment to test the use of a reading guide in which students were supported and induced to critique scientific claims. The present intervention builds upon existing critical reading activities that elicit questioning of the text and the author's intentions by also including additional supports for challenging the author's scientific arguments and detecting potential errors in reasoning. The aim was to encourage the reader to consider additional scientific context that may be necessary to evaluate a claim, and ways in which the author may be wrong. The ultimate goal of the intervention, therefore, was to improve epistemic vigilance at the non-expert level by providing structured opportunities to question the validity of poorly reasoned scientific claims.

The treatment was a reading activity in which students read a media-genre article accompanied by an anticipation guide (i.e., a critical reading guide) that prompted them to evaluate and critique the text for potential flaws or errors in its scientific arguments. Our reading activity was modeled after previously developed reading tasks for evaluating texts (Barton, Heidema, & Jordan, 2002; Snow, Griffin, & Burns, 2005), with additional questions that probed for errors in scientific reasoning (Allchin, 2001) and prompted critique of flaws in argumentation (Ford, 2008; Henderson et al., 2015). Students did not receive additional instruction in scientific reasoning or vigilance against scientific misinformation as part of the present study.

Participants in both conditions read two persuasive, media-genre articles: one at the start of the study and the second after the reading activity was administered. Each made persuasive claims about similar science issues and contained parallel misrepresentations of scientific reasoning and biased language (see Supplementary Material for the two complete texts). We designed each article so that it could be critiqued for flawed scientific reasoning, for the subjective language characteristic of media articles, and for the quality and quantity of cited sources and the author's credentials (Allchin, 2001).

To develop the two texts, we followed a rigorous multi-step process to ensure equivalency. We engaged three scientists in qualitative tests of face validity: the scientists participated in think-aloud interviews with draft texts to identify potential critiques. The researchers ensured that both texts were equivalent in lexical content and grade level.3 Because the readings were of similar content and difficulty, we were able to compare students' progress in response to the intervention directly.

In Table 2, we provide the questions used in the reading guide and examples of participant responses.

Students randomized to the control group were issued a reading activity that did not explicitly prompt for critique of the article's arguments.4 The control condition asked students to read the same media-genre articles and then write a letter to a friend summarizing the article. Although the control condition did not prompt students to critique or evaluate the text's claims, it may have induced students to “think slow.” If so, the two conditions would be less differentiated, which would likely lead us to understate the true impact of the treatment intervention. The control condition was designed to support the double-blind design of the study by giving control students a task that occupied them for a similar amount of time as their peers in the treatment condition.

5 DATA

5.1 Sample

Students in grades 9–12 from 43 classes across seven comprehensive California public high schools participated in the experiment from April to May of 2017. Ten teachers volunteered their classes for the study after a call for participants was emailed to the researcher's network (i.e., a convenience sample). In total, 1081 students were randomized to a condition, consented to participate in the study, and were present during the intervention (i.e., were not absent for the entire study period).5 All participants were informed about the nature of the study without being told details of the treatment or the goal of the experiment. Our analytic sample includes the 902 participants who completed both the pre- and post-surveys; 60 students completed only the intervention and post-survey, and an additional 119 students completed only the pre-survey. We describe attrition in more detail in the Results section.
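The sample flow reported above can be checked arithmetically: the three completion groups should together account for every randomized student. The helper below is a hypothetical illustration that uses only the counts stated in this section; its name and output format are assumptions.

```python
def sample_flow(randomized, both_surveys, post_only, pre_only):
    """Check that the completion groups partition the randomized sample.

    Returns each group's share of the randomized sample.
    Raises ValueError if the groups do not sum to the randomized total.
    """
    accounted = both_surveys + post_only + pre_only
    if accounted != randomized:
        raise ValueError(f"groups sum to {accounted}, expected {randomized}")
    return {
        "both_surveys": both_surveys / randomized,  # analytic sample
        "post_only": post_only / randomized,        # intervention + post-survey only
        "pre_only": pre_only / randomized,          # pre-survey only
    }

# Counts as reported in the Sample section: 902 + 60 + 119 = 1081.
shares = sample_flow(randomized=1081, both_surveys=902, post_only=60, pre_only=119)
for group, share in shares.items():
    print(f"{group}: {share:.1%}")
```

On these counts, the analytic sample is roughly 83% of the randomized students.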

5.2 Dependent variable: Epistemic vigilance

Our main outcome of interest, epistemic vigilance, was measured both at baseline (“Pre-survey”) and after the intervention (“Post-survey”). We developed and validated a survey instrument designed to measure participants' epistemic vigilance (i.e., critical awareness) as a theoretical output of the individual-level effects of critiquing scientific claims for potential errors. Specifically, we designed the survey items to measure the student's vigilance when evaluating the claim's source and the claim itself. The questions on the claim's source evaluated students' perceptions of the author's credibility, the media source's reliability, and the perceived expertise of the author (e.g., “does the webpage appear to be a reliable source of information about the topic?”). The items assessing the claims themselves evaluated students' perceptions of the author's reasoning and the quality of his arguments based on scientific reasoning and use of scientific evidence (e.g., “would you share this author's claims as good information to know?”). The instrument validation process included multiple iterations of cognitive pre-testing, an initial pilot study, and further factor analysis to ensure the validity and reliability of our epistemic vigilance construct (see Supplementary Materials).6 Our final measure was the simple mean of nine multiple-choice items rated on a 5-point Likert scale, all of which loaded on a single factor representing epistemic vigilance. The baseline pre-survey mean for epistemic vigilance was 2.80, and the post-survey mean was slightly higher at 2.82 (Table 1).
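The scoring rule described above, a simple mean of nine 5-point items, can be sketched as follows. The item count and scale bounds come from the text; the function name and the optional `reverse_items` argument are hypothetical, included only because Likert instruments sometimes contain reverse-keyed items. The source does not say whether any of the nine items were reverse-coded, so the default leaves every item as answered.

```python
from statistics import mean

N_ITEMS = 9                   # nine items loaded on a single factor
SCALE_MIN, SCALE_MAX = 1, 5   # 5-point Likert scale

def vigilance_score(responses, reverse_items=()):
    """Return the mean of nine Likert responses as an epistemic vigilance score.

    reverse_items: zero-based indices of items to reverse-code
    (hypothetical; the study does not report reverse-keyed items).
    """
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    scored = []
    for i, r in enumerate(responses):
        if not SCALE_MIN <= r <= SCALE_MAX:
            raise ValueError(f"item {i} response {r} is outside {SCALE_MIN}-{SCALE_MAX}")
        # Reverse-coding on a 1-5 scale maps 1<->5 and 2<->4 and leaves 3 unchanged.
        scored.append(SCALE_MIN + SCALE_MAX - r if i in reverse_items else r)
    return mean(scored)

# Usage with made-up responses for one hypothetical student.
pre = vigilance_score([3, 2, 3, 4, 2, 3, 3, 2, 3])
post = vigilance_score([3, 3, 3, 4, 2, 3, 4, 2, 3])
print(round(post - pre, 3))
```

Averaging rather than summing keeps the score on the original 1 to 5 metric, which is why the reported means (2.80 and 2.82) can be read directly against the response scale.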

| Variable | Mean | SD | Minimum | Maximum | Sample |
|---|---|---|---|---|---|
| Treatment | 0.494 | 0.500 | 0 | 1 | 1081 |
| Attrition (started survey) | 0.110 | 0.313 | 0 | 1 | 1081 |
| Attrition (finished survey) | 0.256 | 0.437 | 0 | 1 | 1081 |
| Baseline epistemic vigilance | 2.796 | 0.717 | 1 | 4.8 | 1015 |
| Missing baseline epistemic vigilance | 0.061 | 0.240 | 0 | 1 | 1081 |
| Post epistemic vigilance | 2.823 | 0.793 | 1 | 5 | 804 |
| Missing post epistemic vigilance | 0.256 | 0.437 | 0 | 1 | 1081 |
| Gender | | | | | |
| Female | 0.455 | 0.498 | 0 | 1 | 1009 |
| Other gender | 0.043 | 0.204 | 0 | 1 | 1009 |
| Missing gender | 0.067 | 0.249 | 0 | 1 | 1081 |
| Race/ethnicity | | | | | |
| White | 0.256 | 0.437 | 0 | 1 | 1007 |
| Black | 0.059 | 0.236 | 0 | 1 | 1007 |
| Hispanic/Latino | 0.412 | 0.492 | 0 | 1 | 1007 |
| Asian/Pacific Islander | 0.099 | 0.299 | 0 | 1 | 1007 |
| Native American | 0.008 | 0.091 | 0 | 1 | 1007 |
| Other | 0.097 | 0.296 | 0 | 1 | 1007 |
| Missing race/ethnicity | 0.068 | 0.253 | 0 | 1 | 1081 |
| Grade level | | | | | |
| Grade 9 | 0.301 | 0.459 | 0 | 1 | 1009 |
| Grade 10 | 0.312 | 0.463 | 0 | 1 | 1009 |
| Grade 11 | 0.260 | 0.439 | 0 | 1 | 1009 |
| Grade 12 | 0.061 | 0.240 | 0 | 1 | 1009 |
| Missing grade level | 0.067 | 0.249 | 0 | 1 | 1081 |
| Age | | | | | |
| Age | 15.62 | 1.106 | 14 | 19 | 1009 |
| Missing age | 0.067 | 0.249 | 0 | 1 | 1081 |
| Home language | | | | | |
| Other language spoken at home | 0.512 | 0.500 | 0 | 1 | 1009 |
| Missing home language | 0.067 | 0.249 | 0 | 1 | 1081 |
| Attitudes | | | | | |
| Interest in science | 3.222 | 0.942 | 1 | 5 | 1020 |
| Trust in Western medicine | 3.603 | 0.752 | 1 | 5 | 1020 |
| Prior experience | | | | | |
| Instruction on reading online media | 0.825 | 0.380 | 0 | 1 | 1021 |
| Instruction in science online media | 0.578 | 0.494 | 0 | 1 | 1021 |
| Ever in AP/Honors Science | 0.150 | 0.357 | 0 | 1 | 1081 |
| Ever in AP/Honors ELA | 0.176 | 0.381 | 0 | 1 | 1081 |

- Note: Data were self-reported by participants in the Pre-survey. Each cell in the “Mean” column shows the average value of all reported values from participants (for binary variables, this value falls between 0 and 1). The reference range for each variable is shown in the “Minimum” and “Maximum” columns—for example, binary variables have a minimum of 0, representing a negative response to the variable, and a maximum of 1, representing a positive response to the variable. The sample size used to calculate the Mean is shown in the rightmost column. The sample, at maximum, is reported to be 1081 student participants. Values that were not reported by all students resulted in sample sizes <1081 for the variable.

| Reading guide prompt | Example response |
|---|---|
| 1. The author says: | Distemper is so rare, there's no need to vaccinate against it. |
| 2. What you think: | True / False |
| 3. What the author thinks: | True / False |
| 4. Why do you think the author made this claim? | The author made this claim to warn readers about the vaccine. |
| 5. What's the author's evidence? | Two studies show it causes pain and complications. |
| 6. What makes this article credible as science information? | The author mentioned studies. They have actual stats on distemper and vaccines. |
| 7. What additional information is missing from this claim (if any?) to convince you more? | More studies to prove it; Newer studies; Other side's viewpoint |
| 8. What other explanations for the author's viewpoint are there? | Maybe it's rare because the vaccine has worked this whole time. |
| 9. Do you think the author understood the evidence correctly? Why or why not? | It's not a lot of studies; I'm not sure how many people they surveyed |
| 10. What might influence the author's viewpoints? | They really, really love their dogs so they might always get upset when their dog gets a shot; They might dislike their vet |
| 11. How might the author be wrong? | The author might be ignoring other studies that show the opposite, but they have not named any. |

- Note: Row 1 contains the given claim from the Claim Text. Rows 2–5 are exercises modeled after other reading guides used for evaluating texts (Snow et al., 2005); they allow students to compare the author's arguments to their own background knowledge. Rows 7–10 probe the reader for omitted context and potential distortions (two categories of erroneous information) to support the reader in critiquing the text and to strengthen the reader's consideration of potential biases and alternative explanations for the evidence. Row 11 prompts students to critique the author.

| Phase | Control | Treatment |
|---|---|---|
| Pre-treatment phase (Day 1, on-site), presented/submitted on Qualtrics web survey software | Students read “Claim Text #1” (see Appendix). Students answered the Pre-survey, rating the trustworthiness of given claims in the text to establish baseline levels of epistemic vigilance. Students explained their reasoning for some ratings in open-ended responses (see Table 2). Students completed the demographics survey. | Same as control. |
| Randomization (Day 2–7, off-site) | Randomized assignment of students to control or treatment groups, stratified by classroom. Assignments were programmed into Qualtrics and associated with each student's unique numerical identifier. | Same as control. |
| Treatment phase (Day 8, on-site), submitted on Qualtrics | Students reviewed Claim Text #1 without the critical reading guide (“Reading Activity”). Students wrote a short summary of Claim Text #1. | Students reviewed Claim Text #1 and completed the critical reading guide (“Reading Activity,” see Table 2) to support evaluation of Claim Text #1. |
| Treatment phase (Day 8, continued) | Students read Claim Text #2 and answered the Post-Survey (identical to the pre-survey). | Same as control. |

| | (1) Attrited from start of post-survey | (2) Attrited from start of post-survey | Control mean | (3) Attrited from end of study | (4) Attrited from end of study | Control mean |
|---|---|---|---|---|---|---|
| Treatment | 0.00654 | 0.00613 | 0.106 | 0.0919*** | 0.0914** | 0.208 |
| | (0.0191) | (0.0191) | | (0.0255) | (0.0264) | |
| R² | 0.078 | 0.100 | | 0.176 | 0.197 | |
| N | 1081 | 1081 | | 1081 | 1081 | |
| Strata fixed effects | Yes | Yes | | Yes | Yes | |
| Baseline controls | No | Yes | | No | Yes | |

- Note: Each column reports a separate regression of attrition from the sample on treatment assignment. The control mean is the unconditional mean (i.e., without controls or strata fixed effects). All models contain fixed effects for randomization strata, and columns 2 and 4 also contain baseline controls for demographic variables. Standard errors clustered by stratum are in parentheses.

- * p < .05; **p < .01; ***p < .001.

| Dependent variable: post-survey score | (1) ITT | (2) ITT | (3) ITT | (4) LOCF | (5) LOCF | (6) LOCF | (7) Imputed | (8) Imputed | (9) Imputed |
|---|---|---|---|---|---|---|---|---|---|
| Effect of treatment | 0.118 | 0.078 | 0.077 | 0.082 | 0.051 | 0.049 | 0.101* | 0.076* | 0.068 |
| Standard error | (0.073) | (0.059) | (0.062) | (0.058) | (0.044) | (0.046) | (0.054) | (0.043) | (0.046) |
| R² | 0.105 | 0.317 | 0.318 | 0.092 | 0.411 | 0.410 | 0.099 | 0.357 | 0.366 |
| N | 804 | 804 | 804 | 1064 | 1064 | 1064 | 1081 | 1081 | 1081 |
| Strata fixed effects | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Baseline controls | No | No | Yes | No | No | Yes | No | No | Yes |
| Pre-survey score | No | Yes | Yes | No | Yes | Yes | No | Yes | Yes |

- Note: Each column reports the estimated effect (standardized beta coefficient) of treatment on Post-Survey scores. Columns (1) through (3) contain results for participants with outcome data (ITT), columns (4) through (6) contain results using the Last Observation Carried Forward (LOCF) method, and columns (7) through (9) contain results from multiple imputation, in which missing outcomes were imputed from demographic variables and, when available, pre-survey scores. Standard errors clustered by stratum are reported in parentheses.

- * p < .10; **p < .05; ***p < .01.

5.3 Potential mediator variables

Through a baseline demographics survey, we collected information on potentially outcome-relevant factors that may mediate how participants experience the intervention. Students' familiarity with web-based media, prior content knowledge, interest in science, views on the text topics, and sociodemographic background may influence how they interact with the text and experience their assigned condition (i.e., treatment or control). The questions in the baseline demographics survey were developed and cognitively pre-tested with other social scientists in the field of science education.

We gathered information on participants' self-reported media and information technology literacy, such as their perceived experience reading online media articles and their specific experience with online media articles in a school setting. These data provided insight into students' background knowledge of media (McClune & Jarman, 2010) and their information and communication technology (ICT) literacy (Leu, Kinzer, Coiro, & Cammack, 2004). Because one's ability to process information plays a key role in whether critical thinking will be engaged (Petty & Wegener, 1999), we analyzed outcomes for heterogeneous treatment effects linked to differences in prior experience with Internet media. While just over 80% of participants (82.5%) reported school-based instruction in reading online media, fewer than 60% (57.8%) reported explicit instruction in reading science-based content online.

Further, because content knowledge and background knowledge of scientific reasoning (see Ryder, 2001) likely play a role in the evaluation of scientific claims, and because literacy is necessary for scientific literacy and the comprehension of scientific texts (Norris & Phillips, 2003), participants were also asked about their current and past coursework in science and English Language Arts (ELA). We recorded the specific courses participants had completed and their current courses. Fewer than 20% of the sample reported ever enrolling in Advanced Placement (AP) or Honors coursework in science (15.0%) or ELA (17.6%).

To capture participants' pre-existing attitudes towards science and the article topic, we asked about their interest in science and their attitudes towards the safety of Western medicine, specifically pharmaceutical drugs and vaccines (i.e., the text topic). Motivation is a key determinant of engagement in critical thinking and has been shown to influence readers' evaluation of persuasive texts (Petty & Wegener, 1999). We captured students' self-reported interest in science and their attitudes towards the safety of Western medicine with Likert-scale items. On average, students expressed fairly high trust in Western medicine and pharmaceutical interventions (3.6 on a 5-point scale), and they rated their personal interest in science lower (3.2 on a 5-point scale). We hypothesized that students with higher confidence in the safety of Western medicine at baseline would be more critical of claims against its safety. In the results section, we detail our analysis of heterogeneous effects based on differential motivation and pre-existing attitudes towards Western medicine.

Lastly, we collected sociodemographic information on potentially outcome-relevant variables during the pre-survey. Participants reported their gender identity, racial/ethnic identification, age, grade in school, and whether a language other than English was spoken at home. Table 1 provides descriptive statistics for the sociodemographic information collected in the baseline survey, in which students self-identified as White (25.6%), Black (5.9%), Hispanic/Latinx (41.2%), Asian-American/Pacific Islander (9.9%), Native American (0.8%), and other race/ethnicity (9.7%). Of the students who reported their gender, 45.5% self-identified as female, 50.2% as male, and 4.3% provided their own identification. Students were roughly evenly distributed across grades 9 through 11, with 30.1% in grade 9, 31.2% in grade 10, and 26.0% in grade 11; a minority of participants were in grade 12 (6.1%). Participants ranged in age from 14 to 19 years, with a mean age of 15.6 years. Our sample represents a diverse group of students that mirrors the sociodemographic make-up of participants' schools and includes greater proportions of students of color than national demographics.7

6 METHODS

6.1 Randomization design and group assignment

The experiment used a double-blinded, 2-level stratified randomization design to produce equivalent treatment and control groups (Bruhn & McKenzie, 2009). Because the study involved students from different grades, teachers, courses, and schools who may have had experiences unique to their class section (e.g., socioeconomic factors, learning experiences), each class served as an individual stratum for treatment assignment, so that students in the same section were assigned to both the treatment and control conditions (Athey & Imbens, 2017). By removing differences between course sections from the treatment comparison, stratified assignment increases our ability to detect smaller treatment differences than other methods of randomization (Box, Hunter, & Hunter, 2005).8 Student demographics, interest in science, experience with Web media, and attitudes towards Western medicine were used to randomize students at the individual level within course strata.
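The within-class assignment described above can be sketched as simple randomization inside each classroom stratum. This is a minimal illustration under stated assumptions: the function name and the seed are hypothetical, each class is split as evenly as possible, and the sketch does not attempt to reproduce how the authors used demographics and attitudes during individual-level randomization.

```python
import random
from collections import defaultdict


def stratified_assign(students, seed=2017):
    """Assign each student to treatment (1) or control (0) within their class stratum.

    students: list of (student_id, class_id) pairs.
    Hypothetical helper: within each class, shuffle and send half to treatment;
    an odd class size gives the extra student to a randomly chosen arm.
    """
    rng = random.Random(seed)  # fixed seed makes the assignment reproducible
    by_class = defaultdict(list)
    for sid, cls in students:
        by_class[cls].append(sid)

    assignment = {}
    for cls in sorted(by_class):
        ids = sorted(by_class[cls])
        rng.shuffle(ids)
        n_treat = len(ids) // 2
        if len(ids) % 2 == 1 and rng.random() < 0.5:
            n_treat += 1
        for i, sid in enumerate(ids):
            assignment[sid] = 1 if i < n_treat else 0
    return assignment
```

Because every class contributes students to both arms, any class-level influence (teacher, school, schedule) is shared by treatment and control within that stratum.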

We randomized all potential respondents to a condition but omitted those who did not consent or were absent for the entire study period, yielding 547 participants in the control group and 534 participants in the treatment group (i.e., N = 1081). We checked the randomization for balance on these factors using an omnibus balance test (Hansen & Bowers, 2008): we regressed treatment assignment on baseline characteristics and used a joint F-test of the null hypothesis that all coefficients on baseline variables were equal to zero. We found no significant differences in baseline characteristics between treatment and control participants.9 In summary, randomization produced balanced groups, so subsequent treatment effect estimates can be interpreted as causal effects of the intervention on the dependent measures.
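The omnibus balance test above regresses treatment assignment on baseline covariates and tests whether all slope coefficients are jointly zero. A simplified sketch follows: it fits the regression by ordinary least squares through the normal equations and returns the overall F statistic, F = (R²/k) / ((1 − R²)/(n − k − 1)). It assumes no strata fixed effects and no clustered standard errors, both of which the study's actual test may have used, and the function names are illustrative. A p-value would come from the F(k, n − k − 1) distribution, for example via SciPy's `scipy.stats.f.sf`.

```python
def ols_r2(X, y):
    """R^2 from an OLS fit of y on the columns of X (X must include an intercept column)."""
    p = len(X[0])
    # Augmented normal-equation matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting solves (X'X) b = X'y
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        pv = A[col][col]
        A[col] = [v / pv for v in A[col]]
        for r in range(p):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
    b = [A[i][p] for i in range(p)]
    fitted = [sum(bj * xj for bj, xj in zip(b, row)) for row in X]
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def omnibus_f(covariates, treatment):
    """Regress 0/1 treatment on covariates; F statistic for the joint null that all slopes are zero."""
    n, k = len(covariates), len(covariates[0])
    X = [[1.0] + list(row) for row in covariates]  # prepend intercept
    r2 = ols_r2(X, treatment)
    f_stat = (r2 / k) / ((1 - r2) / (n - k - 1))
    return f_stat, k, n - k - 1
```

A small F statistic (large p-value) is consistent with balance; it does not prove that the groups are identical.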

6.2 Experimental procedure and integration into classroom setting

A number of steps were taken to maintain the double-blind nature of the study and to avoid spillover effects. We summarize the experiment timeline in Table 3. The experiment was integrated into classrooms in the last 2 months of the school year and was administered by each class's teacher, with students assigned to individual devices (i.e., computers or iPads) with Internet access to the Qualtrics survey platform. To prevent students from revealing their assigned status, treatment and comparison activities were designed to take similar amounts of class time. Furthermore, we reduced the likelihood of spillover effects among students in different sections of the same course by implementing the intervention over the same time period for all students taught by the same teacher.

Because any innovation may temporarily improve outcomes through novelty effects, the experiment spanned approximately 1 week, with at least 7 days elapsing between the administration of the pre-survey and the administration of the treatment and post-survey. One day after the post-survey, participants received a debriefing letter from the researcher that discussed the veracity of the claims in the sample texts.

7 FINDINGS

7.1 Main outcomes: intent-to-treat effect

First, we analyzed all participants with post-survey scores according to the condition to which they were originally assigned, following the Intent-to-Treat (ITT) principle, to yield an unbiased estimate of the effect of treatment assignment (Duflo, Glennerster, & Kremer, 2007). That is, participants were analyzed according to their treatment assignment regardless of whether they completed the assigned activity, and we paid close attention to noncompliance and withdrawal (i.e., attrition) from the study. We report standardized regression coefficients; therefore, our estimates refer to the number of standard deviations by which the outcome variable changes for every one-standard-deviation increase in the exposure variable (Nieminen, Lehtiniemi, Vähäkangas, Huusko, & Rautio, 2013).10 Following standard practice, all regressions included strata fixed effects to account for the randomization design, and our preferred specifications also included baseline controls to improve precision.
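The standardized coefficient described above rescales a raw regression slope by SD(x)/SD(y). The sketch below shows that rescaling for a one-predictor regression, where the standardized slope equals the Pearson correlation. With a 0/1 treatment variable this rescaling multiplies the raw group difference by SD(treatment), about 0.5 here (Table 1), whereas some authors standardize only the outcome; the text does not state which convention was applied, so this is an illustration of the definition given, not of the authors' exact computation.

```python
from statistics import pstdev


def ols_slope(x, y):
    """Slope from a one-predictor OLS regression of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def standardized_beta(x, y):
    """Rescale the raw slope into SDs of y per SD of x."""
    return ols_slope(x, y) * pstdev(x) / pstdev(y)
```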

To interpret changes in epistemic vigilance as causal (i.e., as a response to the intervention), we needed to ensure that participants in the treatment and control groups were equivalent in expectation. We did this in two ways. First, we regressed the composite baseline epistemic vigilance measure on treatment assignment with strata fixed effects and found no significant difference in epistemic vigilance between treatment and control groups at the start of the study (β = −0.0476, SE = 0.0558, p = .550).11 Second, we checked whether the attrition rate differed between the treatment and control groups. Differential attrition may indicate that the students remaining in the treatment and control groups differ in characteristics (e.g., attendance patterns, underlying motivation) that may be related to the outcome of interest. We found no significant difference between treatment and control groups in the proportion of students who did not start the post-survey (β = 0.00613, SE = 0.0191, p = .749), indicating that attrition before the start of the post-survey did not compromise the comparison (see Table 4, Columns 1 and 2).

For our main outcomes, we used the composite baseline and post-survey measures of epistemic vigilance to conduct an endpoint analysis in an ordinary least squares (OLS) regression framework. The model included a main effect for treatment assignment (i.e., 1 = intervention, 0 = control activity) and controlled for baseline epistemic vigilance, randomization strata fixed effects, and the sociodemographic variables collected at baseline.
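The role of the strata fixed effects in this model can be sketched with the Frisch–Waugh–Lovell result: the treatment coefficient from OLS with one dummy per stratum equals the slope obtained after subtracting each stratum's mean from both the outcome and the treatment indicator. The sketch below covers only that part of the model; it omits the baseline score, the sociodemographic controls, and clustered standard errors, and the function names are hypothetical.

```python
from collections import defaultdict


def demean_within(values, strata):
    """Subtract each stratum's mean from the values in that stratum."""
    groups = defaultdict(list)
    for v, s in zip(values, strata):
        groups[s].append(v)
    means = {s: sum(vs) / len(vs) for s, vs in groups.items()}
    return [v - means[s] for v, s in zip(values, strata)]


def itt_strata_fe(y, treat, strata):
    """Treatment coefficient from OLS of y on treat plus stratum dummies,
    computed via within-stratum demeaning (Frisch-Waugh-Lovell)."""
    y_w = demean_within(y, strata)
    d_w = demean_within(treat, strata)
    denom = sum(d * d for d in d_w)
    if denom == 0:
        raise ValueError("treatment does not vary within any stratum")
    return sum(d * v for d, v in zip(d_w, y_w)) / denom
```

Comparing students only with classmates in the same stratum is what allows stratification to remove between-class differences from the estimate.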

The post-survey results showed no statistically significant difference in epistemic vigilance between treatment and control groups, although the point estimate was positive (β = 0.118, SE = 0.073, p > .1; see Column 1, Table 5). After controlling for baseline characteristics and pre-survey epistemic vigilance scores, we likewise found no significant difference between treatment and control groups (Columns 2 and 3, Table 5). In sum, among participants with post-survey outcomes, the critical reading guide had no statistically significant effect on participants' critical awareness.

7.2 Auxiliary analyses

However, we found that attrition by the end of the study was greater in the treatment group than in the control group, implying that adherence to the protocol during follow-up was uneven. Attrition (i.e., not completing the full post-survey) was 20.8% in the control group (n = 114 of 547) and 30.5% in the treatment group (n = 163 of 534). In total, 277 participants (i.e., 114 + 163) did not complete the entire post-survey measuring epistemic vigilance. Table 4 shows that this difference was statistically significant (β = 0.0919, SE = 0.0255, p < .001).12 In sum, differential attrition may have biased the estimated effects of the intervention.
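The attrition rates can be checked directly from the counts reported in this paragraph. The raw gap of about 9.7 percentage points differs slightly from the regression estimate in Table 4 (0.0919), presumably because that estimate conditions on randomization strata; the snippet below only reproduces the unadjusted arithmetic.

```python
# Counts reported in the text
control_n, control_attrited = 547, 114
treat_n, treat_attrited = 534, 163

rate_c = control_attrited / control_n
rate_t = treat_attrited / treat_n
overall = (control_attrited + treat_attrited) / (control_n + treat_n)
raw_gap = rate_t - rate_c  # unadjusted difference in attrition rates

print(f"control {rate_c:.1%}, treatment {rate_t:.1%}, overall {overall:.1%}")
# -> control 20.8%, treatment 30.5%, overall 25.6%
print(f"raw gap: {100 * raw_gap:.1f} percentage points")
# -> raw gap: 9.7 percentage points
```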

In response to differential attrition, we investigated its possible mechanisms and conducted exploratory robustness analyses. Specifically, we employed two alternative estimation methods as robustness checks: Last Observation Carried Forward (LOCF) estimates and multiple imputation estimates. Table 5 presents the estimated effect of treatment assignment on epistemic vigilance using the intent-to-treat (ITT) and alternative approaches.

We implemented a single imputation method known as Last Observation Carried Forward (LOCF), in which a participant's last observed value is imputed for all missing observations at subsequent time points (Overall, Tonidandel, & Starbuck, 2009). This type of imputation provides a conservative estimate of the effect because it assumes that students with missing post-survey scores experienced no change in epistemic vigilance from baseline. We observed a small increase in epistemic vigilance of less than one-tenth of a standard deviation (β = 0.082, SE = 0.058, p > .1). Similarly, in models controlling for baseline characteristics and pre-survey scores, we found small increases in epistemic vigilance that did not meet conventional standards for statistical significance (Table 5, Columns 5 and 6).13 Although imputation generally increases statistical power by filling in missing data and thus increasing the sample size, it also carries an increased risk of bias. We therefore employed an additional imputation method to assess the robustness of our results.
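With only two measurement waves, LOCF reduces to using the post-survey score when it is observed and otherwise carrying the pre-survey score forward. The sketch below assumes missing values are coded as `None` and that students missing both scores simply stay missing; the helper names are hypothetical.

```python
def locf(pre, post):
    """Two-wave LOCF: keep the post-survey score when observed, otherwise carry
    the pre-survey score forward. Students missing both remain None."""
    return [p if p is not None else b for b, p in zip(pre, post)]


def observed(values):
    """Drop entries that are still missing after imputation."""
    return [v for v in values if v is not None]
```

Because an imputed student's outcome equals their baseline, any treatment effect among students who left the study is implicitly set to zero, which is why the text describes LOCF as conservative.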

The second method, multiple imputation, replaces each missing value with a set of plausible values that represent the uncertainty about the correct value (Yuan, 2010). Imputed scores were generated from demographic variables (i.e., gender, grade level, whether the student speaks another language at home, race/ethnicity, etc.) and, when available, baseline epistemic vigilance scores.14 Compared with single imputation methods such as LOCF, multiple imputation reduces bias in the estimated treatment effect, increases statistical power, avoids introducing additional error, and yields better estimates of standard errors (Baron et al., 2007). Using this method, we found increased epistemic vigilance in the treatment condition (β = 0.101, SE = 0.054, p < .1).15 However, we consider these auxiliary analyses only suggestive of a positive effect of the critical reading guide on epistemic vigilance towards science misinformation.
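The text does not describe how the estimates from the multiply imputed datasets were combined. Rubin's rules are the standard pooling approach, so the sketch below, which pools per-imputation treatment estimates and standard errors, is an assumption about that step; it does not implement the imputation model itself. The pooled variance adds the average within-imputation variance to an inflated between-imputation variance, which is how multiple imputation carries the uncertainty about missing values into the standard error.

```python
from statistics import mean, variance


def pool_rubin(estimates, std_errors):
    """Combine per-imputation estimates and standard errors with Rubin's rules."""
    m = len(estimates)
    if m < 2:
        raise ValueError("need at least two imputed datasets")
    q_bar = mean(estimates)                          # pooled point estimate
    within = mean([se ** 2 for se in std_errors])    # average sampling variance
    between = variance(estimates)                    # spread across imputations (m - 1 denominator)
    total = within + (1 + 1 / m) * between
    return q_bar, total ** 0.5
```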

7.3 Intervention compliance and participant characteristics

Given our finding that treatment participants experienced higher rates of attrition than control participants, we conducted further auxiliary analyses to better understand potential mediators. First, we examined students' fidelity to the assigned control or treatment activity (referred to as “treatment fidelity”). Second, we examined the attributes of students who complied with the study (i.e., completed all the treatment or control components). We found that students in the treatment condition were less likely to complete the intervention reading activity and that treatment fidelity was not associated with student sociodemographic characteristics. Lastly, we analyzed time spent on the treatment and control activities and found that, although treatment participants were less likely to complete their activity, they spent more time overall on the intervention activity than control participants spent on the control activity.

To examine fidelity to the assigned condition, we analyzed students' text output in the intervention or control reading activities through (1) systematic text analysis for English words in the open-ended items of the activities and (2) human qualitative coding for the presence of text in these items. Adherence at the start of the protocol was strong: 76.2% of control participants and 75% of treatment participants finished the beginning of their activities. Both systematic text analysis and human qualitative coding indicated no difference between groups in the proportion of students who completed the start of their assigned activity (β = −0.0122, SE = 0.0226, p > .05). However, treatment participants were less likely than control participants to complete the multiple-choice questions (β = −0.114, SE = 0.0274, p < .001) and the open-ended questions (β = −0.318, SE = 0.0273, p < .001). In sum, students were significantly less likely to finish the treatment activity than the control activity, suggesting that the attrition stemmed from some element of the treatment activity (e.g., length, formatting, or difficulty).

One concern is that students' baseline characteristics may have influenced their engagement with the intervention protocol. We examined available demographics, including age, gender, race/ethnicity, and grade level, as well as self-reported interest in science and trust in Western medicine. Using systematic text analysis of the quality of student responses and conventional statistical tests for differences in average baseline characteristics by fidelity, we found no indication of differences based on these attributes, suggesting that they were not associated with fidelity to the assigned condition or with non-compliance.

Fidelity to the assigned condition was lower for treatment participants, and we hypothesize that this lower level of compliance was due to the greater length and cognitive complexity of the treatment protocol compared with the control condition. Auxiliary analyses suggest that all treatment participants, regardless of fidelity to their assigned condition, spent more time on the intervention reading activity than control participants spent on the control activity. Treatment participants who completed the intervention reading activity spent nearly twice as long as control participants. Design implications are considered in our discussion.

7.4 Instrumental variable estimation by 2-stage least squares regression and local average treatment effect

Controlling for observed confounding covariates through regression may not be sufficient for calculating valid causal estimates, because unobserved attributes could drive differences in outcomes across groups (Gelman & Hill, 2007). Our findings suggested that treatment fidelity, that is, whether students finished at least the beginning of the intervention reading activity, could serve as the measure of treatment receipt, with random assignment as the instrumental variable (Duflo et al., 2007), allowing us to estimate the effect of the treatment for students who complied with the activity. We did so using 2-Stage Least Squares (2SLS) regression, which estimates β2SLS using only the variation in treatment receipt that is induced by the instrument and is therefore uncorrelated with the error term. Use of an instrumental variable is appropriate when two assumptions hold: (1) the instrument has a causal effect on the endogenous independent variable (here, treatment receipt), and (2) the instrument is uncorrelated with the error term of the outcome equation.
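With one binary instrument and no covariates, 2SLS reduces to the Wald estimator: the ITT effect on the outcome divided by the effect of assignment on take-up. The sketch below assumes random assignment is the instrument and a 0/1 indicator of treatment receipt (for example, finishing the beginning of the activity) is the endogenous variable. It omits the strata fixed effects and baseline controls used in the paper, so it illustrates the logic rather than reproducing β = 0.316; the function names are hypothetical.

```python
def mean_by(values, z, level):
    """Mean of values among units whose instrument equals level."""
    sel = [v for v, zi in zip(values, z) if zi == level]
    return sum(sel) / len(sel)


def wald_late(y, took_up, assigned):
    """Wald / 2SLS estimate of the complier (local average) treatment effect."""
    itt_y = mean_by(y, assigned, 1) - mean_by(y, assigned, 0)               # reduced form
    first_stage = mean_by(took_up, assigned, 1) - mean_by(took_up, assigned, 0)
    if first_stage == 0:
        raise ValueError("assignment has no effect on take-up; estimate undefined")
    return itt_y / first_stage
```

Dividing by the first stage scales the diluted ITT effect up to the compliers, which is why a complier estimate is larger than the corresponding ITT estimate when take-up is incomplete.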

Following our main specification, the 2SLS regression model included strata fixed effects and controlled for baseline characteristics.16 We found a moderate effect of the treatment intervention for participants who complied with the protocol (β = 0.316, SE = 0.146, p < .05).17 In sum, although the ITT estimates were imprecise, the ITT and 2SLS analyses together suggest that the intervention increased epistemic vigilance and, more specifically, that these effects were driven by students who completed the intervention reading activity.

We acknowledge that the 2SLS estimates are not a true average treatment effect, because they capture effects only for participants who complied with their treatment assignment (i.e., compliers); they are a local average treatment effect. While some argue that ITT effects are more relevant for public policy because they reflect imperfect compliance in real-world settings, it is also important to understand the effect of an intervention when it is implemented as intended (i.e., the 2SLS estimates) and to compare it with the ITT estimates. Examining both the ITT and 2SLS estimates therefore provided a more holistic understanding of the intervention's effects.

7.5 Other measured variables and heterogeneous effects

We also examined whether the intervention protocol had heterogeneous effects that varied with participant characteristics. In particular, we hypothesized that differential trust in Western medicine and participants' content knowledge could influence the intervention's efficacy at eliciting changes in epistemic vigilance. Participants with more science content knowledge and more experience critically engaging with written text may respond more strongly to the critical reading guide. We found that the treatment protocol elicited larger impacts for older students (i.e., 11th graders), whereas results for other self-reported indicators typically associated with greater content knowledge and critical reading ability (i.e., coursework in science, coursework in English language arts, AP/IB or Honors courses) were inconclusive due to sample size constraints.

Prior research suggests that educational messages have more impact when recipients' beliefs are not yet established. We tested this hypothesis by using our baseline measure of attitudes towards pharmaceuticals and vaccines to assess whether participants who reported more neutral baseline beliefs responded differently to the treatment intervention. Among participants who expressed more ambivalence at baseline (i.e., neither weak nor strong confidence in Western medicine), those in the treatment group experienced larger improvements in epistemic vigilance than control group members (β = 0.242, SE = 0.093, p < .05). These findings are consistent with research in science communication showing that it is difficult to change people's views of scientific information once their perspectives have been shaped (Bruine de Bruin & Wong-Parodi, 2014; Lewandowsky, Ecker, Seifert, Schwarz, & Cook, 2012). This has implications for the timing of instruction intended to increase epistemic vigilance.
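The subgroup comparison above can be illustrated with unadjusted means. The sketch computes the treatment–control difference within a subgroup and the difference between subgroups; in a saturated OLS model y ~ T + A + T×A with no other covariates, the latter equals the coefficient on the interaction T×A. The 0/1 `ambivalent` indicator is hypothetical, since the text does not say how ambivalence was coded, and the text does not say whether β = 0.242 is a within-subgroup effect or an interaction coefficient from a model with controls and fixed effects, so this is not a reproduction of that estimate.

```python
def arm_mean(y, treat, group, g, arm):
    """Mean outcome in subgroup g for treatment arm (1) or control arm (0)."""
    vals = [v for v, d, s in zip(y, treat, group) if s == g and d == arm]
    return sum(vals) / len(vals)


def subgroup_effect(y, treat, group, g):
    """Unadjusted treatment-control difference within subgroup g."""
    return arm_mean(y, treat, group, g, 1) - arm_mean(y, treat, group, g, 0)


def interaction_effect(y, treat, ambivalent):
    """Difference in treatment effects between ambivalent (1) and other (0) students;
    equals the T x A coefficient in a saturated OLS model without covariates."""
    return subgroup_effect(y, treat, ambivalent, 1) - subgroup_effect(y, treat, ambivalent, 0)
```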

8 DISCUSSION

This study provided a rare opportunity for students to confront science information as potentially false claims—a context that students encounter increasingly often in our contemporary information landscape. Furthermore, this study is one of few that experimentally tests the effects of an intervention to support critique and evaluation in a science classroom setting, building upon the extant body of literature on the pivotal role of critique in the assessment of scientific claims. Our results highlight four key points.

First, students' epistemic vigilance was not significantly different between the treatment and control groups, but this comparison was complicated by substantial attrition in the treatment group. This finding highlights the need to improve the intervention's design and length, as most of the attrition occurred while students completed the intervention activity. Our post hoc analyses point to several design features of the intervention that may have limited improvements in epistemic vigilance. For example, the lengthy reading guide may have loaded poorly over slow or unstable Internet connections at school sites; presenting it as a series of shorter web forms could help it load reliably. Other design issues that may have affected intervention compliance or contributed to respondent fatigue include the length of the form, the placement of visual elements, and the usability of the form on various devices and browsers (Jarrett & Gaffney, 2009; Wroblewski, 2008).

Second, when accounting for treatment compliance, there was an increase in epistemic vigilance, which suggests that inducing students to critique the text may be effective in raising critical awareness in the face of misinformation. We found positive effects for students who completed the critical reading intervention, equal to an effect size of 0.10. This is equivalent to a participant moving from the 50th to roughly the 54th percentile of the sample distribution of epistemic vigilance (assuming approximately normally distributed scores). This is a small effect, especially given that it was detected using a specialized instrument to measure epistemic vigilance and that the outcome was measured close in time to the intervention (Kraft, 2020). However, this was a “light-touch” intervention: classroom teachers did not receive extensive professional development to conduct it, and the intervention itself (i.e., a critical reading scaffold) took less than a single class period to implement. The intervention was low-cost, highly scalable, and transferable to other subjects (e.g., civics education). Other recent classroom-based interventions in the areas of source evaluation (Bråten et al., 2019) and evaluation of lines of evidence (Lombardi et al., 2018) have achieved larger effect sizes (though these studies are difficult to compare given their different dependent variables, measures, and statistical procedures); however, these interventions were intensive, requiring professional development for teachers and multiple class periods for students. In sum, the present work can be considered a proof of concept: when students were prompted to “think slow” with a brief reading guide, they were more vigilant against misinformation if they engaged fully with the activity. Further research is needed to determine whether these effects last and whether additional training or instructional time might increase their magnitude.

This finding is consistent with previous research showing that priming students to be vigilant about misinformation may create an “inoculation effect” against being duped, reducing susceptibility to misinformation before it is encountered (Cook, 2016; Lewandowsky et al., 2012). Furthermore, this intervention could work synergistically with other educational activities that support students' epistemic vigilance toward scientific claims, particularly evaluating the plausibility of claims (see Lombardi et al., 2013; Lombardi et al., 2018) and sourcing (see Bråten et al., 2019; McGrew et al., 2019).

Third, an examination of heterogeneous effects indicated that the impacts were concentrated among more advanced students (i.e., 11th-grade students). This pattern suggests the treatment may be more effective for students who have accumulated a threshold level of background knowledge or experience reading science media online. More research on the forms of background knowledge that may underlie epistemic vigilance is needed to guide grade-level modifications to the intervention and to refine the support students need to critique science information proficiently.
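
A heterogeneous-effects analysis of this kind starts from effect estimates computed separately within each subgroup. The sketch below assumes a simple standardized mean difference (Cohen's d with a pooled SD) per subgroup; the study's actual models may have included covariates or interaction terms. The `subgroup_effects` helper, the record layout, and the toy scores are all hypothetical. Note that a larger estimate in one subgroup is not by itself a test of heterogeneity; that is usually assessed with a treatment-by-subgroup interaction term.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference (treatment minus control) using the
    pooled sample standard deviation of the two groups."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


def subgroup_effects(records):
    """Estimate d separately within each subgroup (hypothetical helper).

    records: iterable of (subgroup, arm, score) tuples, where arm is
    "treatment" or "control". Subgroups with fewer than two scores in
    either arm are skipped because stdev needs at least two values."""
    groups = {}
    for subgroup, arm, score in records:
        arms = groups.setdefault(subgroup, {"treatment": [], "control": []})
        arms[arm].append(score)
    return {
        name: cohens_d(arms["treatment"], arms["control"])
        for name, arms in groups.items()
        if len(arms["treatment"]) >= 2 and len(arms["control"]) >= 2
    }


if __name__ == "__main__":
    # Hypothetical toy scores, not data from the study.
    toy = [
        ("grade_11", "treatment", 3), ("grade_11", "treatment", 4), ("grade_11", "treatment", 5),
        ("grade_11", "control", 2), ("grade_11", "control", 3), ("grade_11", "control", 4),
        ("other_grades", "treatment", 3), ("other_grades", "treatment", 4), ("other_grades", "treatment", 5),
        ("other_grades", "control", 3), ("other_grades", "control", 4), ("other_grades", "control", 5),
    ]
    for name, d in subgroup_effects(toy).items():
        print(f"{name}: d = {d:.2f}")
```

Each subgroup estimate rests on fewer students than the full-sample estimate, so its uncertainty is correspondingly larger.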

Fourth, we found that students who were initially uncertain of their attitudes toward the topic of the article (in this case, modern medicine) became more skeptical of the false claims. This finding suggests the treatment may be more impactful for those with less established beliefs, who are therefore more open to changing their views. It aligns with theories of persuasion suggesting that when issues touch on an individual's values or deep-seated attitudes, people are likely to be biased and less likely to process arguments rationally (see Wood, Kallgren, & Preisler, 1985). Given the growing societal challenges of the “post-truth” era and the public's tendency to rely on feelings over evidence, this treatment may have real-world value for students as they take part in civic decision-making as adults. This conclusion also supports previous theoretical work on science literacy and civics education. Taken together, this finding highlights the pressing need to teach critical thinking during compulsory education, before perspectives become more entrenched in adulthood.

Overall, the findings of the present study suggest that supporting students' critical thinking, specifically by prompting critique of scientific claims, may be effective in raising epistemic vigilance against erroneous information. The main limitation is attrition, which reduced our ability to estimate effects precisely, particularly in the subgroup analyses. Another potential limitation is that the intervention was delivered in digital form, so students could not physically mark the text as they read it. Students may therefore have been less engaged with the reading process, although presenting the activity on paper would have placed a greater burden on participating teachers. Future research on a similarly designed intervention should focus on design elements and usability to identify improvements that would facilitate student engagement.
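
To illustrate why attrition erodes precision, especially within subgroups, the sketch below computes the standard error of a difference in two group means and an approximate minimum detectable effect (MDE) in standard-deviation units. It assumes a common outcome SD, independent individual-level observations (no clustering by classroom or school, which would further inflate the MDE), and the conventional 2.8 multiplier (about 1.96 + 0.84, i.e., a two-sided test at α = .05 with 80% power). The per-arm sample sizes are hypothetical, not the study's counts.

```python
from math import sqrt


def se_difference_in_means(sd: float, n_treatment: int, n_control: int) -> float:
    """Standard error of a difference in two independent group means,
    assuming a common outcome standard deviation sd."""
    return sd * sqrt(1.0 / n_treatment + 1.0 / n_control)


def minimum_detectable_effect(n_treatment: int, n_control: int,
                              multiplier: float = 2.8) -> float:
    """Approximate minimum detectable effect in SD units (sd = 1).
    The default multiplier 2.8 ~= 1.96 + 0.84 (two-sided alpha = .05,
    80% power). Ignores covariate adjustment and clustering."""
    return multiplier * se_difference_in_means(1.0, n_treatment, n_control)


if __name__ == "__main__":
    # Hypothetical per-arm sample sizes, not the study's actual counts.
    for n in (500, 250, 125):
        print(f"n = {n} per arm: MDE ~ {minimum_detectable_effect(n, n):.2f} SD")
```

Because the standard error scales with 1/√n, halving the per-arm sample raises the MDE by a factor of √2 (about 41%), and quartering it doubles the MDE. This is why an effect near 0.10 SD is hard to detect in subgroups that have lost students to attrition.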

Our study adds to the growing literature underscoring the value of critique in argumentation, and specifically of challenging flawed scientific arguments, an area that continues to be neglected in science education. Additionally, the tested intervention has the potential to supplement existing educational scaffolds for critical thinking in science. Finally, the ability to critique and evaluate the validity of claims about science is a valuable skill that prepares young people for future civic participation on science issues (e.g., vaccinations, climate change, and public health measures to fight global pandemics). The personal choices they make in interpreting potentially questionable science information will have consequential political and social impacts. At a time when wide-reaching technology can instantly spread truths and falsehoods alike, it is imperative to educate today's youth to be vigilant, before “our knowledge is a receding mirage in an expanding desert of ignorance” (Durant, 2011).