Abstract

Grit, a non-cognitive skill that indicates perseverance and passion for long-term goals, has been shown to predict academic achievement. This paper provides evidence that grit also predicts student outcomes during the challenging period of the Covid-19 pandemic. We use a unique dataset from a digital learning platform in the United Arab Emirates to construct a behavioral measure of grit. We find that, controlling for baseline achievement, students who were grittier according to this measure before the pandemic register smaller declines in math and science scores during the coronavirus period. Using machine learning, we show that behavioral data obtained from the platform prior to the pandemic can explain 77% of the variance in academic resilience. A survey measure of grit coming from the same students, on the other hand, does not have significant predictive power over performance changes. Our findings have implications for interventions on non-cognitive skills, as well as for how data from digital learning platforms can be used to predict student behavior and outcomes, which we expect will be increasingly relevant as AI-based learning technologies become more common.

Introduction

Grit is an important non-cognitive skill that has been shown to predict achievement outcomes including educational attainment, academic performance, and retention1,2. In the school context, grit has a significant role on outcomes over and above cognitive skills, and can have large multiplier effects, given that students who give up early have fewer chances to recover. Recognizing this, in many countries, educational policymakers and NGOs implement programs to foster grit in the school environment, especially with “growth mindset” interventions that teach students the malleability of skills through effort and perseverance3,4. In addition to its association with performance at school, the non-cognitive skill of grit can also be important in predicting resilience in the distance learning context during the Covid-19 pandemic. The challenges brought about by reduced access to teachers and peers in remote learning likely impaired the support system for students, changed the interaction of teachers, students and learning content5, and made self-regulated learning more central6. Motivating oneself when tackling potentially difficult learning tasks can be more challenging with remote learning, in the absence of direct interactions with teachers and peers. Indeed, recent evidence shows that even in “best-case scenario” countries with favorable conditions, students have experienced learning losses with remote instruction during the pandemic7.

In this paper, we use unique data from an online educational platform in the United Arab Emirates to explore whether the non-cognitive skill of “grit” predicts performance changes in math and science subjects during the coronavirus period, among a sample of 5th-9th graders. The platform was used for blended learning in K-12 public school classrooms to deliver core curriculum before the pandemic. With the switch to remote education due to Covid-19, it became the sole remote learning environment in the country for public K-12 schools. In this context, we utilize two alternative pre-pandemic measures of grit, one coming from a survey and one coming from actual behavior in the form of students’ response to setbacks experienced within the digital learning platform, to predict performance changes during the pandemic.

How to measure non-cognitive skills accurately is a major issue that has interdisciplinary relevance. In much of the literature, grit has been measured by the Duckworth-Quinn grit scale8. Measured this way, it has been shown to correlate with a wide range of outcomes such as retention and school performance, although there are also studies that have found null effects9. However, survey measures tend to be prone to demand effects or social desirability effects10, which may be particularly concerning in an adolescent student sample. Experimental economists have recently sought to construct behavioral measures of grit, using external, dynamic tasks that require real effort11. While such measures have been shown to have strong predictive power over success, implementing and using them at a large scale may be difficult in the regular classroom environment, as they tend to require the use of material rewards and implementation by external researchers. In this respect, using naturally occurring data from a digital learning platform enables us to obtain a unique measure of “behavioral grit” that would be difficult to obtain in the traditional learning environment and possible to use more widely, with the growing integration of digital learning into education. Specifically, we are able to observe “after-hours” studying and practice by students, capturing sustained voluntary effort, which allows us to define a measure of “revealed” grit in terms of the response to performance setbacks experienced on the platform. Using this behavioral measure along with a well-known survey measure in the same sample, we are thus able to make the contribution of comparing the predictive power of survey measures of grit and the behavioral measure of grit from platform data, both coming from the pre-Covid period, on student performance and behavior during Covid-19.

Our data confirm that the pandemic has led to significant declines in performance in math and science subjects, in line with studies showing that learning has been disrupted during the Covid-19 period. We find that the survey measure of grit8 has limited power in predicting performance in our sample, especially during the coronavirus period, and importantly, it does not predict the declines in performance. We hypothesize that “revealed grit”, measured using behavioral data coming from the digital platform, may be a better predictor of the performance response. Specifically, we hypothesize that students whose behavior before the pandemic period was grittier register lower declines in academic performance during Covid-19, controlling for baseline diagnostic scores. We indeed find that revealed grit, unlike the survey-based measure or more strongly than it: 1) predicts both pre-Covid and post-Covid performance on the digital platform in math, and 2) predicts the change in performance, with grittier students registering lower declines in performance in math and science. Finally, we show that pre-Covid behavioral data in the digital platform contains significant information to explain academic resilience: up to 77% of the variance in declines in performance in math and science during the Covid-19 period can be explained using machine learning.

The rest of the paper is organized as follows. In the next section (Results), we present the findings. The following section puts forward a discussion, and in a Methods section, we put forward details about the data and empirical methodology, as well as the construction of the behavioral grit measure.

Results

Performance changes and Covid-19

As expected, the Covid-19 period has led to an overall decline in performance in both math and science. Table 1 shows that the average score on the platform declines by about 11 points in math and by a similar 10 points in science, for both genders. These declines are significant at the 1% level (p < 0.0001 for both math and science in t tests).

Grit measures and school performance: pre- and post-Covid

The “behavioral grit” measure we construct is based on the student’s response to a performance that deviates in a negative way from her earlier average performance (please see the Methods section for details). We interpret this fall as a “setback”, and the student’s positive response to this setback (higher effort exerted out of school hours following the setback) as “grit”. Table 2 shows that there is a strong correlation between behavioral grit and student performance measured in overall platform scores in math, controlling for baseline diagnostic test scores, which can be considered an objective baseline performance measure. One standard deviation of behavioral grit is associated with 4.6 points higher math scores prior to Covid relative to an average of roughly 50, which is equivalent to roughly 9% of the mean, and the association is significant (p < 0.05). For science, grit is not strongly associated with test scores prior to Covid. Note that grit’s stronger association with math is expected, given that students tend to find math subjects particularly difficult; consistent with this, grit has been shown to have the most relevance in math performance11. Girls in this sample perform 42 points higher on math tests, and 37 percent higher on science tests (p < 0.05). The diagnostic score for each subject, as expected, is also positively associated with platform performance (p < 0.01 for both subjects). After Covid commenced, grit is associated with both math and science test scores (p < 0.01) at a substantial 15 points in both subjects. Of note, the gender gap shrinks for the math field but remains the same for the science field.

In comparison, Table 3 shows that survey grit is associated with only one of these four measures of student performance: 0.8 points in science prior to Covid (p < 0.10). Notably, the association with gender here is more statistically significant than in Table 2. Science performance may be picking up certain characteristics of students that are associated with self-reported grit; however, this association is small and is no longer significant in the post-Covid period.

To visualize this comparison, we present a figure that includes the coefficients for behavioral grit and survey grit side-by-side in Fig. 1.

This figure presents coefficient plots comparing the effects of behavioral grit and survey grit on academic performance in math and science, both before and after the onset of the Covid-19 pandemic. Derived from regression analyses controlling for baseline diagnostic test scores and gender, these plots illustrate the magnitude and statistical significance of associations between grit measures and academic resilience. Behavioral grit is measured by students’ positive responses (increased effort) to performance setbacks, while survey grit is self-reported. The figure allows for direct comparison of how these different grit measures relate to academic performance across subjects and in response to the pandemic’s disruption.

Grit measures and change in performance post-Covid

Next, we explore non-parametrically the relationship between behavioral grit and academic resilience (change in overall test scores, as used above, during Covid-19). A significant predictive association for behavioral grit can be seen in binned scatterplots in Fig. 2 (math) and Fig. 3 (science), which condense the information from a scatterplot by partitioning the x‐axis into bins and calculating the mean of y within each bin.

This figure presents binned scatterplots illustrating the correlation between pre-Covid behavioral grit and changes in math scores, demonstrating academic resilience in mathematics. This visualization technique divides the independent variable (pre-Covid behavioral grit) into equal-sized intervals or bins, calculates the average change in math scores within each bin, and plots these averages against the bin midpoints. This method reduces visual noise and helps identify overall trends that might be obscured in a standard scatterplot, potentially revealing nonlinear relationships. The plot’s pattern indicates the correlation between pre-pandemic grit and math score changes, with the slope showing the direction and strength of this relationship. The figure helps demonstrate whether students’ grit, measured by behavioral indicators before the Covid-19 pandemic, correlates with their resilience in math performance. The number of bins used balances granularity with noise reduction, affecting the plot’s detail and clarity. This visualization is generated using the statistical software Stata.

This figure presents binned scatterplots illustrating the correlation between pre-Covid behavioral grit and changes in science scores, demonstrating academic resilience in science. This visualization technique divides the independent variable (pre-Covid behavioral grit) into equal-sized intervals or bins, calculates the average change in science scores within each bin, and plots these averages against the bin midpoints. This method reduces visual noise and helps identify overall trends that might be obscured in a standard scatterplot, potentially revealing nonlinear relationships. The plot’s pattern indicates the correlation between pre-pandemic grit and science score changes, with the slope showing the direction and strength of this relationship. The figure helps demonstrate whether students’ grit, measured by behavioral indicators before the Covid-19 pandemic, correlates with their resilience in science performance. The number of bins used balances granularity with noise reduction, affecting the plot’s detail and clarity. This visualization is generated using the statistical software Stata.
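The binning step described in these captions can be illustrated with a minimal Python sketch. It is not the Stata routine used for the figures: the function name `binned_means`, the default of 10 bins, and the choice of equal-width intervals are assumptions made here for illustration (quantile-based bins with equal numbers of observations would be an alternative reading of "equal-sized").

```python
from statistics import mean

def binned_means(x, y, n_bins=10):
    """Split the range of x into n_bins equal-width bins and return
    (bin midpoint, mean of y) for every non-empty bin."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and of equal length")
    lo, hi = min(x), max(x)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if all x are equal
    buckets = [[] for _ in range(n_bins)]
    for xi, yi in zip(x, y):
        # the maximum of x is assigned to the last bin
        idx = min(int((xi - lo) / width), n_bins - 1)
        buckets[idx].append(yi)
    return [(lo + (i + 0.5) * width, mean(b))
            for i, b in enumerate(buckets) if b]

# Hypothetical data: x is a grit score, y is the change in test score
points = binned_means([0, 1, 2, 3], [10, 20, 30, 50], n_bins=2)
```

Plotting the returned pairs gives the binned scatterplot; fewer bins smooth more, while more bins retain more detail.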

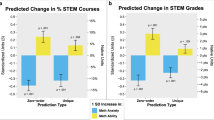

Table 4 shows, in regression form, that survey grit is essentially not correlated or slightly negatively correlated with resilience during Covid-19, where resilience is measured as the performance change, defined as overall test scores (as above) after Covid minus overall test scores pre-Covid. In contrast, behavioral grit is associated with 0.1 points greater resilience for math and science, respectively. The lack of similar predictive associations for survey grit can also be seen in Figs. 4 and 5, which present the non-parametric relationship between survey grit and academic resilience in math and science test scores, respectively. To further visualize this comparison, we present a figure that includes the coefficients for behavioral grit and survey grit side-by-side in Fig. 6.
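As a reading aid, the resilience regression can be written schematically as below. This is a sketch rather than the exact specification behind Table 4: the notation is introduced here, and the control set (baseline diagnostic score and gender) is assumed to match the controls described for the earlier performance regressions.

```latex
% Notation introduced here for illustration: i indexes students, s the subject (math or science)
\Delta y_{i,s} = y^{\mathrm{during}}_{i,s} - y^{\mathrm{pre}}_{i,s},
\qquad
\Delta y_{i,s} = \alpha_s + \beta_s\,\mathrm{Grit}_{i,s} + \gamma_s\,\mathrm{Diag}_{i,s} + \delta_s\,\mathrm{Female}_{i} + \varepsilon_{i,s}
```

Under this reading, a positive $\beta_s$ means that grittier students experience a smaller decline (a less negative $\Delta y_{i,s}$) in subject $s$.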

This figure presents binned scatterplots illustrating the correlation between survey grit and changes in math scores, our measure for academic resilience in mathematics. This visualization technique divides the independent variable (survey grit) into equal-sized intervals or bins, calculates the average change in math scores within each bin, and plots these averages against the bin midpoints. This method reduces visual noise and helps identify overall trends that might be obscured in a standard scatterplot, potentially revealing nonlinear relationships. The plot’s pattern indicates the correlation between grit and math score changes, with the slope showing the direction and strength of this relationship. The figure helps demonstrate whether students’ grit, measured by survey responses, correlates with their resilience in math performance. The number of bins used balances granularity with noise reduction, affecting the plot’s detail and clarity. This visualization is generated using the statistical software Stata.

This figure presents binned scatterplots illustrating the correlation between survey grit and changes in science scores, our measure for academic resilience in science. This visualization technique divides the independent variable (survey grit) into equal-sized intervals or bins, calculates the average change in science scores within each bin, and plots these averages against the bin midpoints. This method reduces visual noise and helps identify overall trends that might be obscured in a standard scatterplot, potentially revealing nonlinear relationships. The plot’s pattern indicates the correlation between grit and science score changes, with the slope showing the direction and strength of this relationship. The figure helps demonstrate whether students’ grit, measured by survey responses, correlates with their resilience in science performance. The number of bins used balances granularity with noise reduction, affecting the plot’s detail and clarity. This visualization is generated using the statistical software Stata.

This figure presents coefficient plots comparing the effects of behavioral grit and survey grit on changes in academic performance in math and science after the onset of the Covid-19 pandemic. This visualization is derived from regression analyses measuring resilience as the change in overall test scores from the pre-Covid to the Covid periods. The four bars display the coefficients for behavioral grit and changes in math and science scores, and survey grit and changes in math and science scores. The magnitude of each bar indicates the strength of the association between the respective grit measure and academic resilience. The figure illustrates the contrasting associations between behavioral versus self-reported grit with students’ academic performance changes during the pandemic, and allows for a direct comparison of how the two different grit measures predict the performance response.

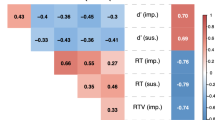

To examine the total variance explained by behavioral grit measured using the digital platform, we use an elastic net regression model in which we include all clicks recorded by the platform, pre-processed into 400 categories. The detailed set of observables we have for this test includes students accessing different types of instructional content on the platform for each subject (e.g. watching a video, accessing course-related reading material, doing exercises, starting/ending tests to check understanding), the number of times each content item is accessed, and the time spent on the platform. To aid interpretability, we first use principal components analysis on these other variables. Note that before fitting the elastic net model, we orthogonalize each explanatory variable to the behavioral grit measure by projecting each successive variable on the behavioral grit variable and taking residuals. The elastic net model is then run using these residuals, which are uncorrelated with behavioral grit.
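The orthogonalization step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the code used for the paper: the helper name `residualize` is hypothetical, and each explanatory variable is assumed to be projected on behavioral grit with a simple one-regressor OLS that includes an intercept.

```python
from statistics import mean

def residualize(x, g):
    """Residuals from a simple OLS regression of x on g (with intercept).
    The result has mean zero and is uncorrelated with g in the sample."""
    mx, mg = mean(x), mean(g)
    sgg = sum((gi - mg) ** 2 for gi in g)
    if sgg == 0:
        raise ValueError("g has no variation")
    slope = sum((xi - mx) * (gi - mg) for xi, gi in zip(x, g)) / sgg
    return [(xi - mx) - slope * (gi - mg) for xi, gi in zip(x, g)]

# Hypothetical example: g is behavioral grit, x is one platform activity variable
grit = [0.0, 1.0, 2.0, 3.0]
video_views = [1.0, 3.0, 2.0, 6.0]
video_views_resid = residualize(video_views, grit)
```

Applying the same function to every activity variable yields regressors that carry only the information not already captured by behavioral grit.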

In Table 5, we report the multivariate regression including only the variables selected by the elastic net regression. The results indicate that 77% of variance in resilience is explained by behavioral data measured in the platform. The data, while inadequate to definitively infer causality, thus show the potential for high-dimensional revealed preference measurement of non-cognitive skills.
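For reference, and assuming the regularized regression used here is the standard elastic net, the estimator solves a penalized least-squares problem of the following form. The tuning parameters $\lambda$ and $\alpha$ are written in one common parametrization; their values are not reported in this section, so none are assumed.

```latex
% Standard elastic net objective (one common parametrization); n students, predictors x_i
\hat{\beta} = \arg\min_{\beta}\;
\frac{1}{2n}\sum_{i=1}^{n}\bigl(y_i - x_i^{\top}\beta\bigr)^{2}
+ \lambda\Bigl[\alpha\,\lVert\beta\rVert_{1} + \tfrac{1-\alpha}{2}\,\lVert\beta\rVert_{2}^{2}\Bigr],
\qquad
R^{2} = 1 - \frac{\sum_{i}\bigl(y_i - \hat{y}_i\bigr)^{2}}{\sum_{i}\bigl(y_i - \bar{y}\bigr)^{2}}
```

The $\ell_1$ term sets some coefficients exactly to zero, which is what allows a subset of variables to be "selected" for the follow-up regression, and $R^2$ is the share of variance explained that the 77% figure refers to.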

Discussion

The coronavirus pandemic has brought a unique set of challenges for education. The learning loss incurred due to the pandemic by students in grades 1 to 12 is estimated to translate to about 3 percent lower income over the entire lifetime12. In this paper, we show that an individual non-cognitive skill, grit, can predict how large a performance loss a student experiences due to the pandemic.

Research on the effects of Covid-19 has shown that personality traits can predict behavioral responses such as hoarding or compliance with guidelines13, as well as coping responses14. Johnston et al. show that Covid-19 led to declines in mental health, and that this decline is predicted not by financial resources but by the non-cognitive skill of self-efficacy15. We show that in the educational setting, the non-cognitive skill of grit predicts a less negative performance response to the challenges faced during the pandemic. In this sense, we provide new evidence for why fostering grit in student samples is important for achieving better learning outcomes. The finding is in line with the recent literature showing associations between grit/resilience and the response to the pandemic. For instance, studies have found better mental health and satisfaction outcomes16 and a lower level of academic/career concerns17 among grittier or more resilient students during the pandemic, with the former result also extending to samples other than students, e.g. health personnel18. Similarly, a link between growth mindset (a precursor for grit) and lower perceived stress and higher academic engagement/satisfaction outcomes among students in the Covid-19 period has been documented19. Finally, two studies with smaller samples show a positive link between a survey measure of grit and exam performance during e-learning20,21, indicating that the expected positive link between grit and achievement also extends to the pandemic period.

Our behavioral measure of grit is based on identifying performance declines, and utilizing the unique advantage offered by the data recorded in voluntary after-school hours activity on the platform to measure the revealed effort response. In this sense, our measure captures the “perseverance of effort” aspect of the construct of grit. Our results are therefore consistent with Crede et al. and Ponnock et al. 22,23, who show that it is the perseverance facet of grit (in contrast to consistency of interest) that has explanatory power over academic performance.

Our findings suggest that programs that aim to build grit early on in the educational environment (e.g. through promoting growth mindset) can also have positive effects on resilience and the academic performance response in times of crisis3,24. To the extent that such crises also exacerbate educational inequalities25, teaching grit could reduce the widening gap between students coming from different social strata. The results also underscore the potential for digital learning platforms not only as facilitators of learning but also as a valuable tool for both assessing and fostering non-cognitive skills, which can indirectly lead to better learning outcomes. That is, such platforms can be used, by design, not only to deliver learning content but also to collect valuable behavioral information that can provide a foundation for customized learning for students with heterogeneous levels of non-cognitive skills.

The finding that behavioral data can explain a significant portion of the variance in academic resilience suggests that data collected from digital learning platforms can be valuable for measuring non-cognitive skills, as well as predicting students’ future performance or response to shocks. Using digital data on behavior and performance, AI-based remote learning technologies can therefore be used to identify not only students who are struggling in terms of comprehension but also those who lack resilience or motivation, and to actively respond by customizing the learning experience for different students by adapting levels/sequences of difficulty, presenting content that requires sustained effort, or providing personalized motivational feedback tailored to individual performance patterns. We expect that such strategies will be especially relevant with the growing use of AI-based online learning technologies, and may create significant benefit in improving student engagement and achievement. Moreover, features that promote gritty behavior (e.g. messages about growth mindset, which has been shown to be effective in several interventions) can be directly incorporated into digital learning environments to support self-regulated virtual learning. Overall, digital learning platforms can be used to collect valuable behavioral information that can equip educators with insights into their students’ engagement and perseverance and can support more targeted interventions to cultivate grit, both within and beyond the classroom setting. Finally, our results underscore the importance of constructing revealed preference measures of non-cognitive skills, and show how self-reported survey data can be complemented with high-dimensional revealed preference data for predicting educational outcomes.

Our study has several limitations. We should note that the survey measure of grit may have drawbacks in the sense that students may not have proper incentives to respond truthfully, and factors such as social desirability or boredom may affect answers and introduce bias or noise. From an external validity perspective, our data come from middle-schoolers in a set of schools in the United Arab Emirates, and we cannot exclude the possibility that the results are country-dependent or age-dependent. That said, we believe that external validity may be more of a concern in the results regarding the survey grit measure rather than behavioral grit. If the students in our sample, for example, are more likely to report socially desirable answers in self-reports than those in other countries or in other age groups, this may prevent a direct generalization on the relative costs of using survey vs. behavioral data in other contexts. Still, since the measure from the online educational platform reflects real actions, it is not prone to social desirability effects or reporting confounds, and the negative association between the behavioral measure of grit and performance declines is likely to be more generally relevant. Finally, we should note that the results may also depend on how the pandemic was experienced in the country and the level of integration of digital learning into the normal school curriculum. On these fronts, we believe that our estimates may be conservative, and results may have been even more pronounced in other settings. This is because the digital platform we study was already partly integrated into the school curriculum and may have facilitated a level of familiarity with remote learning. If this were not the case, the switch to online education may have had even higher costs and adaptability issues, possibly leading to higher performance declines with the pandemic.
Similarly, the United Arab Emirates had a strict testing and vaccination policy that kept case counts under control, which may have limited the traumatic experience of the pandemic in the country. It would be an interesting avenue for further research to study the relationship of behavioral measures of non-cognitive skills such as grit and educational engagement and outcomes as well as responses to different shocks using data from different settings, to explore the boundaries of the results we find. Future research should also consider the measurement of other non-cognitive skills and aspects of motivation from behavioral digital learning data, and explore the potential of using such data for both assessment/evaluation of individual non-cognitive skills and designing related targeted interventions. Along the latter dimension, it is possible to envision many different types of practical applications, ranging from informational interventions for students, parents and schools, to motivational interventions based on embedding adaptive content into learning platforms. We expect that such applications would also provide fruitful ground for collaborations between academia and private/public institutions that work in the domain of digital education, as the conclusions of research on these platforms using data provided by these stakeholders may provide valuable insight into the optimal design and efficient use of these platforms to maximize student learning.

Methods

Our data come from a widely used digital learning platform in the United Arab Emirates. The data are provided by Alef Education, a global K-12 education technology company that provides K-12 students and teachers with core curriculum and learning analytics through blended learning settings. The platform also provides experiential learning that enables students to apply and transfer their newly acquired skills, and practice lessons for further skill mastery, with the aim of creating an effective instructional and classroom model. In the pre-pandemic period, the platform was used for blended learning in K-12 public school classrooms, delivering core curriculum. After the onset of the pandemic, with the switch to complete distance learning, the platform became the sole environment for teaching and learning in the country, in conjunction with communication tools such as Zoom. The educational technology organization that has developed the platform conducts diagnostic performance tests and surveys at schools in the United Arab Emirates as part of its periodic research and evaluation activities, with approvals from the Department of Education and Knowledge (ADEK) and relevant school principals prior to data collection. Students and teachers receive a thorough explanation of the purpose of any questionnaires or surveys. All data collected comply with government student privacy protocols and exclude personally identifying information. The dataset we use in the paper comes from a subset of students, for whom survey measures of grit and performance data in out-of-platform diagnostic tests before the pandemic period are available, in addition to data coming from the digital platform. All data used in this paper were provided to the authors as secondary, anonymized data.

Construction of the behavioral grit measure

There are several types of activities for students to engage in on the platform. For each subject (math and science), students can watch instructional videos, study digital course materials, and take online assessments to check their understanding of the material. The platform records every activity, from when the student started the activity to when it ended, how many assessments the student attempted, as well as all other login/logout attempts etc. Here, we define two important concepts that will be used in the current paper for analysis. We define a student’s “platform performance” as the average score of all assessments taken by the student within a specific period of time. These scores, and hence their averages, range from 0 to 100. We define performance metrics as above for each student and subject (math and science) in the pre/post-Covid periods separately. We also calculate the differences in score from pre- to post-Covid per subject, in order to see what might have impacted the change in performance.
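The two performance quantities defined above can be sketched as follows. The function names are hypothetical, and returning `None` for a student with no assessments in a period is an assumption made here, not a rule stated in the text.

```python
from statistics import mean

def platform_performance(scores):
    """Average of all assessment scores (each 0-100) in a period.
    Returns None if the student took no assessments in that period."""
    return mean(scores) if scores else None

def performance_change(pre_scores, post_scores):
    """Post-Covid minus pre-Covid platform performance for one subject."""
    pre = platform_performance(pre_scores)
    post = platform_performance(post_scores)
    if pre is None or post is None:
        return None
    return post - pre

# Hypothetical student: math scores before and during the Covid period
change = performance_change([60, 80], [50, 70])  # a negative value is a decline
```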

The second variable we use is the number of attempts for a test made by each student. The intensity of engagement with the digital platform during school hours is usually determined by the teacher. For this reason, we focus on out-of-school hours, where it is completely up to the student to use the platform for learning or practice. Given that out-of-school hours activity is voluntary, students differ in how many times they attempt an assessment task. The number of times a student approaches an assessment task is defined as the “attempts”, which we use to define gritty behavior.

There are many ways to conceptualize how digital learning data can be used to measure grit. Our grit measure is based on the student’s response to a performance that deviates negatively from her earlier average performance. We interpret this fall as a “setback”, and the student’s positive response to this setback (higher effort exerted out of school hours following the setback) as “grit”. Specifically, we define our main grit variable as follows. For each student and for every day in our sample (“Day t”), we calculate the difference between the student’s average performance in the past week (during the days t-7 to t-1) and performance on Day t. If today’s score is sizably lower than last week’s average score, that is, if there is a score decline of at least 5 points from the previous week, we define this incidence as a “setback” where a behavioral grit response may be observed. We define the gritty response as an increase in effort, which we capture by the number of attempts the student made in their formative tests on the platform during out-of-school hours following the setback. Specifically, for each setback event, we compare the number of out-of-school attempts on the following day (t + 1) with the previous week’s average number of attempts (t-7 to t-1). Note that we do not include weekends in the calculations, as the number of attempts is significantly lower. When Thursday is day t, Sunday becomes t + 1. The behavioral grit variable takes the value of 1 if there is an increase in attempts, and 0 otherwise. Once we have the grit measure for each day t, we take an average over all days in our sample, separately for the pre- and during-Covid periods. Thus, we have, for each student, the percentage of the time that out-of-school attempts increased after a sizable score decline. We interpret this as a measure of behavioral grit, which we calculate separately for math and science subjects.
We then compare our behavioral grit measure with the well-known survey grit measure, based on Duckworth and Quinn’s “short grit scale”8, with questions reproduced in the Methods section.

We summarize the calculation of our behavioral grit measure below, and provide some numerical examples of the calculation in Fig. 7:

1. Determine if there is a significant setback: The average performance of the subject from day t-7 to t-1 is compared to the performance on day t. If there is a significant drop in performance on day t, it is considered a “setback”.

2. Assess gritty behavior, defined as a positive response to the setback in terms of attempts made: If a setback is observed on day t, the number of attempts made by the subject in out-of-school hours after day t is examined. If the subject increases their attempts following the setback as compared to their average attempts in the period before the setback, this is counted as gritty behavior. Note that grit is not defined when there is no setback.

3. Behavioral grit is then calculated as the percentage of times when gritty behavior is shown in response to setbacks. For example, if there are setbacks on 6 days in the window taken and gritty behavior is shown twice, the behavioral grit score for that period is 2/6 = 0.33. Doing this calculation for each day for 8 weeks before Covid-19 and for 12 weeks during Covid-19, we calculate the average behavioral grit scores before and during the Covid-19 pandemic.
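The three steps above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' code: the function name `behavioral_grit`, the list-based inputs, and the treatment of the "previous week" as the seven preceding school-day entries (weekends already removed) are assumptions made for the example, as is the toy data.

```python
from statistics import mean

SETBACK_THRESHOLD = 5.0  # "at least 5-point" score decline, as stated in the text
WINDOW = 7               # comparison window t-7 .. t-1 (assumed: 7 school-day entries)


def behavioral_grit(scores, attempts):
    """Share of setback days followed by an increase in out-of-school attempts.

    scores[i] and attempts[i] refer to the i-th school day of one student in
    one subject and one period. Returns None if the period has no setback.
    """
    daily_grit = []
    # Day t needs a full prior window and a following day t + 1.
    for t in range(WINDOW, len(scores) - 1):
        past_score = mean(scores[t - WINDOW:t])
        if past_score - scores[t] < SETBACK_THRESHOLD:
            continue  # Step 1: no setback, so daily grit is not defined
        past_attempts = mean(attempts[t - WINDOW:t])
        # Step 2: gritty response = more attempts on day t + 1 than the prior average
        daily_grit.append(1 if attempts[t + 1] > past_attempts else 0)
    # Step 3: fraction of setbacks that were followed by a gritty response
    return mean(daily_grit) if daily_grit else None


# Toy data (hypothetical): two setbacks, one followed by more attempts.
scores = [80, 80, 80, 80, 80, 80, 80, 70, 80, 60, 80]
attempts = [2, 2, 2, 2, 2, 2, 2, 2, 4, 1, 1]
print(behavioral_grit(scores, attempts))  # 0.5
```

In the toy series, the first setback is followed by four attempts against a prior average of two (gritty), while the second is followed by one attempt (not gritty), giving a score of 1/2.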

Fig. 7 gives concrete examples of the calculation of the behavioral grit measure. Panel a shows how setbacks are determined, and consequently, daily grit. Note that daily grit is not calculated when there is no setback. Panel b shows the calculation of the average behavioral grit for the pre- and post-Covid periods.

In our sample, we have 56 days of observations for the pre-Covid period and 84 days of observations for the during-Covid period. Since we compare each day with an average from the previous week, we omit first-week observations from both the pre- and during-Covid periods. Note that before the start of the “Covid period” in our data, there was a 16-day break, during which average activity on the platform was 90% lower than in both the pre- and post-Covid periods. Omitting the first week of each period therefore leaves 49 effective days for the pre-Covid period and 77 effective days for the during-Covid period. If performance does not decline sizably or increases on a given day, the grit variable for that day is not defined. Also, since there is little room for performance improvement for very high-performing students (whose daily score average is consistently higher than 90), these students are omitted from the analysis.

We should note that there may be other ways to define “behavioral grit” using such data. Our results are largely robust to a set of variations in the assumptions we make in the definition; for example, if we look at effort not only the day after, but two days after the setback, or if we define “setback” to be only when the student registers a “bottom 20%” performance based on his/her historic performance distribution.

The survey questions in the Duckworth-Quinn short grit scale are reproduced below:

- New ideas and projects sometimes distract me from previous ones
- Setbacks don’t discourage me. I bounce back from disappointments faster than most people
- I have been obsessed with an idea or project for a short time but later lost interest
- I am a hard worker
- I often set a goal but later choose to follow a different one
- I have difficulty keeping my focus on projects that take more than a few months to complete
- I finish whatever I begin
- I am diligent

Data

We use data coming from 1920 students, in 17 schools and 229 classes, out of which 1279 are female and 641 are male. The students are middle- and high schoolers, and the data include five grades: grades 5 to 9. To be specific, we have 391, 262, 488, 600 and 179 students in grades 5 to 9, respectively. Note that during our data period, only two students changed schools, and these have been omitted from the analysis. In case of class changes, we take the last class in which the student was enrolled during the academic year as the relevant class. We have access to objective scores from a diagnostic test on math and science as well as a survey, which were all run before the pandemic, in Fall 2019. In addition, we have platform data from the period January 10 to March 5, 2020 (8 weeks), which we define as the pre-Covid period. The post-Covid period goes from March 22, 2020 (the day when anti-Covid measures were taken in the UAE and fully remote schooling started), to June 13, 2020 (12 weeks). We omit the intermediate period March 5 to 22, 2020, as the schools were closed and there were very limited attempts on the platform. Note that the sample we use in this paper reflects the set of students, out of a representative sample of all Abu Dhabi public school students, who completed both the diagnostic test (mandatory) and the grit survey (optional). There may be selection in the sample in the sense that the lower tail in terms of motivation has likely been left out, but our results on the effect of grit may be considered conservative in this sample.
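As a quick consistency check on the period lengths quoted in the text (56 and 84 days, a 16-day break, and 49 and 77 effective days after dropping the first week), the dates above can be run through Python's standard `datetime` module. The variable names are illustrative, and counting both endpoints of each period as observation days is an assumption.

```python
from datetime import date

# Period boundaries as stated in the Data section.
pre_start, pre_end = date(2020, 1, 10), date(2020, 3, 5)
covid_start, covid_end = date(2020, 3, 22), date(2020, 6, 13)


def days_inclusive(start, end):
    """Number of calendar days in [start, end], counting both endpoints."""
    return (end - start).days + 1


pre_days = days_inclusive(pre_start, pre_end)        # 8 weeks
covid_days = days_inclusive(covid_start, covid_end)  # 12 weeks
break_days = (covid_start - pre_end).days - 1        # days strictly between the periods

# The first week of each period is dropped because it has no prior-week average.
effective_pre = pre_days - 7
effective_covid = covid_days - 7

print(pre_days, covid_days, break_days, effective_pre, effective_covid)
```

Under these assumptions the script prints 56, 84, 16, 49 and 77, matching the figures reported in the Methods.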

In addition to the above variables, in an additional analysis of revealed preference data on non-cognitive skills, we implement an elastic net regression (referred to below as elastic regression) using 400 variables from the digital platform. These variables come from “click data” recorded on the platform, and include a detailed set of observables about students’ platform activity, such as accessing different types of instructional content for each subject (e.g. videos, reading material, exercises, tests) as well as the timing and duration of these activities. The machine-learning model shrinks coefficients toward zero, and sets them exactly to zero when they do not explain much variance, allowing the consideration of a wide range of potential explanatory variables. To reduce the risk of confusion, we use principal components analysis (PCA), a technique for reducing the dimensionality of datasets by creating new uncorrelated variables that successively maximize variance and minimize information loss. We use PCA on all of the features from the digital platform. We then report a regression that includes only the variables selected by elastic regression.

Elastic regression is a statistical method used to analyze the relationship between a dependent variable and one or more independent variables. It combines two popular regression techniques: ridge regression and lasso regression. In traditional linear regression, the goal is to find a line that best fits the data points by minimizing the sum of squared differences between the predicted values and the actual values. However, when dealing with a large number of variables or when the variables are highly correlated, linear regression can lead to overfitting or unstable estimates. Elastic regression addresses these issues by introducing two penalty terms to the regression equation. The first term, borrowed from ridge regression, shrinks the coefficients of the variables towards zero, reducing their impact on the model. The second term, borrowed from lasso regression, can set some coefficients exactly to zero, effectively performing variable selection. By combining these two penalties, elastic regression strikes a balance between reducing the complexity of the model and selecting relevant variables. It is particularly useful when dealing with high-dimensional data, where the number of variables is larger than the number of observations. Because the model shrinks coefficients, in some cases exactly to zero, when they do not explain much variance, it allows the consideration of a wide range of potential confounding variables so as to address concerns of possible omitted variable bias26,27.
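To make the two penalties concrete, the following is a minimal coordinate-descent sketch of an elastic net fit in plain Python. It is an illustration under stated assumptions, not the estimation code used in the paper: the objective follows the common parametrization (1/2n)·RSS + λ[α‖β‖₁ + (1−α)/2·‖β‖₂²], the function names `soft_threshold` and `elastic_net` are made up for the example, the data are assumed to be centered (no intercept), and the values of λ and α are arbitrary.

```python
def soft_threshold(z, gamma):
    """Lasso part: shrink z toward zero by gamma, returning 0 inside [-gamma, gamma]."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def elastic_net(X, y, lam, alpha, n_iter=200):
    """Coordinate descent for
        (1/2n) * sum((y - X b)^2) + lam * (alpha * |b|_1 + (1 - alpha)/2 * |b|_2^2).

    X is a list of rows; columns of X and y are assumed centered and no
    column is all zeros.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            # Covariance of feature j with the residual that ignores feature j.
            rho = 0.0
            for i in range(n):
                pred_without_j = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - pred_without_j)
            rho /= n
            # The L1 term thresholds the numerator; the ridge term inflates the denominator.
            beta[j] = soft_threshold(rho, lam * alpha) / (col_sq[j] + lam * (1 - alpha))
    return beta


# Toy data (hypothetical): y depends only on the first of two orthogonal features.
X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
y = [2.0, -2.0, 2.0, -2.0]
print(elastic_net(X, y, lam=0.0, alpha=0.5))  # no penalty: ordinary least squares
print(elastic_net(X, y, lam=0.5, alpha=0.5))  # penalized fit
```

With no penalty the first coefficient is recovered at 2; with the penalty it is shrunk to about 1.4, while the irrelevant second coefficient stays at exactly zero, which is the variable-selection behavior described above.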

We also use principal components analysis (PCA), a statistical technique for reducing the dimensionality of a dataset while retaining most of its variation, by creating uncorrelated variables that successively maximize variance and minimize information loss. It is commonly used for feature extraction and data compression. PCA works by identifying the directions (principal components) in which the data varies the most. These principal components are linear combinations of the original variables and are orthogonal to each other, meaning they are uncorrelated. The first principal component captures the largest amount of variation in the data, followed by the second principal component, which captures the second largest amount of variation, and so on. By selecting a subset of the top principal components, we can effectively reduce the dimensionality of the data while preserving its essential structure. PCA has numerous applications in various fields, including biology, genetics, neuroscience, and image processing. We use PCA on all of the variables coming from click data from the digital learning platform, and then run a multivariate regression that includes the principal components selected by elastic regression along with our measure of behavioral grit.
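The idea of a first principal component can be illustrated with a small, dependency-free sketch. It extracts the leading direction of a covariance matrix by power iteration, which is one of several ways to compute PCA and is not necessarily what the authors' software does. The helper names and the four-row toy dataset are hypothetical.

```python
def center(rows):
    """Subtract each column's mean so every feature has mean zero."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    return [[r[j] - means[j] for j in range(p)] for r in rows]


def covariance(centered):
    """Sample covariance matrix of already-centered rows."""
    n, p = len(centered), len(centered[0])
    return [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(p)]
            for a in range(p)]


def first_component(cov, n_iter=200):
    """Power iteration: returns (variance captured, unit-length direction)."""
    p = len(cov)
    # Start vector; assumed not to be orthogonal to the leading direction.
    v = [1.0] + [0.0] * (p - 1)
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(p)) for a in range(p)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    variance = sum(v[a] * cov[a][b] * v[b] for a in range(p) for b in range(p))
    return variance, v


# Toy data (hypothetical): two positively correlated features.
rows = [[2.0, 2.0], [-2.0, -2.0], [1.0, -1.0], [-1.0, 1.0]]
centered = center(rows)
cov = covariance(centered)
variance, direction = first_component(cov)
share = variance / sum(cov[j][j] for j in range(len(cov)))
# Component scores: each observation projected onto the leading direction.
scores = [sum(x * d for x, d in zip(r, direction)) for r in centered]
print(direction, share)
```

In this toy example the leading direction weights both features equally and accounts for roughly 80% of the total variance; the resulting `scores` column is the kind of uncorrelated summary variable that can then enter a regression in place of the original features.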

Data availability

The data used in the paper come from students in public schools in the United Arab Emirates. The authors have received the de-identified data from ALEF Education. The students are minors and therefore the data are sensitive. Due to the legal agreement between ALEF Education and the Ministry of Education of the United Arab Emirates, the public sharing of data is restricted. Requests for data underlying the results in the study can be made to the data owner, United Arab Emirates Ministry of Education (https://www.moe.gov.ae/).

Code availability

The code is available upon request from the authors.

References

Duckworth, A. L., Kirby, T. A., Tsukayama, E., Berstein, H. & Ericsson, K. A. Deliberate practice spells success: why grittier competitors triumph at the national spelling bee. Soc. Psychol. Pers. Sci. 2, 174–181 (2011).

Eskreis-Winkler, L., Duckworth, A. L., Shulman, E. P. & Beal, S. The grit effect: predicting retention in the military, the workplace, school and marriage. Front. Psychol. 5, 36 (2014).

Dweck, C. Mindset: The New Psychology of Success (Random House, 2006).

Paunesku, D. et al. Mind-set interventions are a scalable treatment for academic underachievement. Psychol. Sci. 26, 784–793 (2015).

City, E. A., Elmore, R. F., Fiarman, S. E., & Teitel, L. Instructional Rounds in Education (Harvard Education Press) 30 (2009).

McCombs, B. L. The role of the self-system in self-regulated learning. Contemp. Educ. Psychol. 11, 314–332 (1986).

Engzell, P., Frey, A. & Verhagen, M. D. Learning loss due to school closures during the COVID-19 pandemic. Proc. Natl. Acad. Sci. 118 (2021).

Duckworth, A. L. & Quinn, P. D. Development and validation of the Short Grit Scale (GRIT–S). J. Pers. Assess. 91, 166–174 (2009).

Zisman, C. & Ganzach, Y. In a representative sample grit has a negligible effect on educational and economic success compared to intelligence. Soc. Psychol. Pers. Sci. 12, 296–303 (2021).

Bertrand, M. & Mullainathan, S. Do people mean what they say? Implications for subjective survey data. Am. Econ. Rev. 91, 67–72 (2001).

Alan, S., Boneva, T. & Ertac, S. Ever failed, try again, succeed better: results from a randomized educational intervention on grit. Q. J. Econ. 134, 1121–1162 (2019).

Hanushek, E. A. & Woessmann, L. The Economic Impacts of Learning Losses (OECD Education Working Papers, 2020).

Zettler, I. et al. The role of personality in COVID-19-related perceptions, evaluations, and behaviors: findings across five samples, nine traits, and 17 criteria. Soc. Psychol. Pers. Sci. 13, 299–310 (2022).

Volk, A. A., Brazil, K. J., Franklin-Luther, P., Dane, A. V. & Vaillancourt, T. The influence of demographics and personality on COVID-19 coping in young adults. Pers. Individ Differ. 168, 110398 (2021).

Johnston, D. W., Kung, C. S. J., & Shields, M. A. Who is resilient in a time of crisis? The importance of financial and non-financial resources. IZA Discussion Paper No. 13720 (2020).

Casali, N., Feraco, T. & Meneghetti, C. Keep going, keep growing: a longitudinal analysis of grit, posttraumatic growth, and life satisfaction in school students under COVID-19. Learn. Individ. Differ. 105, 102320 (2023).

Lytle, A. & Shin, J. L. Resilience and grit predict fewer academic and career concerns among first-year undergraduate students during COVID-19. Soc. Psychol. Educ. 26, 227–240 (2023).

Luceño-Moreno, L., Talavera-Velasco, B., García-Albuerne, Y. & Martín-García, J. Symptoms of posttraumatic stress, anxiety, depression, levels of resilience and burnout in spanish health personnel during the COVID-19 pandemic. Int. J. Environ. Res. Public Health 17, 5514 (2020).

Zhao, H., Xiong, J., Zhang, Z. & Qi, C. Growth mindset and college students’ learning engagement during the COVID-19 pandemic: a serial mediation model. Front. Psychol. 12, 621094 (2021).

Ghanizadeh, A. Higher education amid COVID-19 pandemic: Contributions from resilience, positive orientation and grit. J. Appl. Res. High. Educ. 14, 1670–1685 (2022).

Sulla, F., Aquino, A. & Rollo, D. University students’ online learning during COVID-19: the role of grit in academic performance. Front. Psychol. 13, 825047 (2022).

Credé, M., Tynan, M. C. & Harms, P. D. Much ado about grit: a meta-analytic synthesis of the grit literature. J. Pers. Soc. Psychol. 113, 492 (2017).

Ponnock, A. et al. Grit and conscientiousness: another jangle fallacy. J. Res. Pers. 89 (2020).

Yeager, D. S. et al. A national experiment reveals where a growth mindset improves achievement. Nature 573, 364–369 (2019).

Marina, M. & Bonacini, L. Coronavirus pandemic, remote learning and education inequalities. GLO Discussion Paper. No. 679, Global Labor Organization (GLO), Essen (2020).

Zou, H. & Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. B 67, 301–320 (2005).

Friedman, J., Hastie, T. & Tibshirani, R. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33, 1–22 (2010).

Author information

Contributions

Idea and Research Conceptualization: S.E., D.L.C., T.E. and the ALEF team (A.N. and X.M.) contributed significantly to the conceptualization and design of the research. Write-up: The manuscript was primarily written by S.E. and D.L.C., with substantial input and review from T.E., A.N., X.M., and E.Y. Data Analysis: E.Y. took the lead in data analysis, with significant contributions from D.L.C., ensuring the integrity and accuracy of the data analysis process. All authors (S.E., D.L.C., T.E., A.N., X.M., and E.Y.) read and approved the completed version, and accept accountability for all aspects of the work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chen, D.L., Ertac, S., Evgeniou, T. et al. Grit and academic resilience during the COVID-19 pandemic. npj Sci. Learn. 9, 57 (2024). https://doi.org/10.1038/s41539-024-00265-3

DOI: https://doi.org/10.1038/s41539-024-00265-3